Install the CLI

Install the Solana CLI:

sh -c "$(curl -sSfL https://release.anza.xyz/stable/install)"

Add it to the PATH environment variable:

export PATH="$HOME/.local/share/solana/install/active_release/bin:$PATH"

sudo vi /etc/profile

export PATH="$HOME/.local/share/solana/install/active_release/bin:$PATH"

source /etc/profile

Set the cluster

solana config set --url https://api.devnet.solana.com

Request test tokens (airdrop)

solana airdrop 5

Create a token

spl-token create-token

Output:

Creating token 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT under program TokenkegQfeZyiNwAJbNbGKPFXCWuBvf9Ss623VQ5DA

Address: 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT

Decimals: 9

Signature: adBFA3wdYahsRPZGWuHhFMSZ83D7Znr9M5D7SKtif31ArQWRS5HdzXa1ifEbtNZHNRmnPSce91R96fK9BdE6PwP

https://explorer.solana.com/tx/adBFA3wdYahsRPZGWuHhFMSZ83D7Znr9M5D7SKtif31ArQWRS5HdzXa1ifEbtNZHNRmnPSce91R96fK9BdE6PwP?cluster=devnet

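- 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT is the address of a keypair generated on the fly at creation time; it serves as the unique identifier of the new token (the mint address).

- 8TKG1ez28ZYzNgGTein6Pc97yvxWwnHecVL9ZhdZTxd5 is the address of the wallet that created the token (the fee payer).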

JS code:

import { createMint, getMint, getOrCreateAssociatedTokenAccount, mintTo, getAccount } from '@solana/spl-token';

import { clusterApiUrl, Connection, Keypair, LAMPORTS_PER_SOL } from '@solana/web3.js';

const payer = Keypair.generate();

const mintAuthority = Keypair.generate();

const freezeAuthority = Keypair.generate();

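const connection = new Connection(clusterApiUrl('devnet'), 'confirmed');

// Fund the payer first: a freshly generated keypair has no SOL for fees or rent

const airdropSignature = await connection.requestAirdrop(payer.publicKey, LAMPORTS_PER_SOL);

await connection.confirmTransaction(airdropSignature);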

const mint = await createMint(

connection,

payer,

mintAuthority.publicKey,

freezeAuthority.publicKey,

9 // We are using 9 to match the CLI decimal default exactly

);

console.log(mint.toBase58());

// 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT

Right after creation the supply is zero, because nothing has been minted yet:

spl-token supply 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT

JS code:

const mintInfo = await getMint(

connection,

mint

)

console.log(mintInfo.supply);

// 0

Create an associated token account (ATA) to hold the token

spl-token create-account 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT

Output:

Creating account 3tykH6gHjjyqYHBigukDXZaj3K6XupjKxYLMA2Srfbc2

Signature: 3jK2bbob5JqpAiXxUFTqDfKJZMZ5kpgUASVUNaWi8dp3N9bsZPEXba2rB4E9KUyUUYCmAFuYQwB7uRXGQihQfvG7

https://solscan.io/tx/3jK2bbob5JqpAiXxUFTqDfKJZMZ5kpgUASVUNaWi8dp3N9bsZPEXba2rB4E9KUyUUYCmAFuYQwB7uRXGQihQfvG7?cluster=devnet

Equivalent JS code:

const tokenAccount = await getOrCreateAssociatedTokenAccount(

connection,

payer,

mint,

payer.publicKey

)

console.log(tokenAccount.address.toBase58());

// 3tykH6gHjjyqYHBigukDXZaj3K6XupjKxYLMA2Srfbc2

Check the balance of the current account; before anything is minted, the balance is 0:

spl-token balance 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT

The address passed to the command is the mint address.

Mint 100 tokens

spl-token mint 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT 100

Output:

Minting 100 tokens

Token: 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT

Recipient: 3tykH6gHjjyqYHBigukDXZaj3K6XupjKxYLMA2Srfbc2

Signature: 37LD7EVARr5vtA5kHLMmjXk176gxXhTakNdRnFZ4u7GKWA62oAnhtix2xBAYoyNhWECPhdvyqf1XyZBupGqoqvzF

The CLI derives the ATA address automatically. Put simply, the flow is:

The fee payer (8TKG1ez28ZYzNgGTein6Pc97yvxWwnHecVL9ZhdZTxd5) calls the SPL Token program (TokenkegQfeZyiNwAJbNbGKPFXCWuBvf9Ss623VQ5DA) -> the program locates the token by its unique identifier (mint 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT) -> 100 tokens are minted -> they are deposited into the ATA (3tykH6gHjjyqYHBigukDXZaj3K6XupjKxYLMA2Srfbc2) of the fee payer (8TKG1ez28ZYzNgGTein6Pc97yvxWwnHecVL9ZhdZTxd5).

Equivalent JS code:

await mintTo(

connection,

payer,

mint,

tokenAccount.address,

mintAuthority,

100000000000 // because decimals for the mint are set to 9

)
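The amount passed to mintTo is expressed in raw base units, which is why 100 tokens at 9 decimals become 100000000000. The helper below is a minimal sketch of that conversion; toBaseUnits is a hypothetical name (not part of @solana/spl-token), and it assumes the amount is given as a plain non-negative decimal string.

```javascript
// Convert a human-readable token amount into raw base units.
// toBaseUnits is a hypothetical helper used only for illustration.
// BigInt is used so large supplies do not lose precision.
function toBaseUnits(uiAmount, decimals) {
  const [whole, fraction = ''] = String(uiAmount).split('.');
  if (fraction.length > decimals) {
    throw new Error(`too many decimal places for a mint with ${decimals} decimals`);
  }
  // Right-pad the fractional part with zeros up to the mint's decimals.
  const padded = fraction.padEnd(decimals, '0');
  return BigInt(whole + padded);
}

console.log(toBaseUnits('100', 9)); // 100000000000n
console.log(toBaseUnits('0.5', 9)); // 500000000n
```

The result can be passed as the amount argument of mintTo or transfer, which take raw base units.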

Check the balance

spl-token balance 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT

Output: 100

The CLI automatically derives the ATA address from the current wallet address and queries its balance.

JS code:

const tokenAccountInfo = await getAccount(

connection,

tokenAccount.address

)

console.log(tokenAccountInfo.amount);

// 100000000000n (raw base units, i.e. 100 tokens at 9 decimals)

Check the total supply

spl-token supply 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT

Output: 100

List all tokens you own

spl-token accounts

Token Balance

-----------------------------------------------------

34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT 100

JS code:

import {AccountLayout, TOKEN_PROGRAM_ID} from "@solana/spl-token";

import {clusterApiUrl, Connection, PublicKey} from "@solana/web3.js";

(async () => {

const connection = new Connection(clusterApiUrl('devnet'), 'confirmed');

const tokenAccounts = await connection.getTokenAccountsByOwner(

new PublicKey('8YLKoCu7NwqHNS8GzuvA2ibsvLrsg22YMfMDafxh1B15'),

{

programId: TOKEN_PROGRAM_ID,

}

);

console.log("Token Balance");

console.log("------------------------------------------------------------");

tokenAccounts.value.forEach((tokenAccount) => {

const accountData = AccountLayout.decode(tokenAccount.account.data);

console.log(`${new PublicKey(accountData.mint)} ${accountData.amount}`);

})

})();

Wrap SOL into a token (SOL -> WSOL)

spl-token wrap 1

Wrapping 1 SOL into F3XA5sEfzS5AJbZ8733YygzcGV3UKizrGS2FteHjwemB

Signature: 3BNYUpykfpcDjFJnJMkzXPKG5Rqego3ypLx9yRfRFxKXhR25WQtGxLhyT65VtPv72bpsShBDM2b7Swuy1LwwzvkL

JS code:

import {NATIVE_MINT, createAssociatedTokenAccountInstruction, getAssociatedTokenAddress, createSyncNativeInstruction, getAccount} from "@solana/spl-token";

import {clusterApiUrl, Connection, Keypair, LAMPORTS_PER_SOL, SystemProgram, Transaction, sendAndConfirmTransaction} from "@solana/web3.js";

(async () => {

const connection = new Connection(clusterApiUrl('devnet'), 'confirmed');

const wallet = Keypair.generate();

const airdropSignature = await connection.requestAirdrop(

wallet.publicKey,

2 * LAMPORTS_PER_SOL,

);

await connection.confirmTransaction(airdropSignature);

const associatedTokenAccount = await getAssociatedTokenAddress(

NATIVE_MINT,

wallet.publicKey

)

// Create token account to hold your wrapped SOL

const ataTransaction = new Transaction()

.add(

createAssociatedTokenAccountInstruction(

wallet.publicKey,

associatedTokenAccount,

wallet.publicKey,

NATIVE_MINT

)

);

await sendAndConfirmTransaction(connection, ataTransaction, [wallet]);

// Transfer SOL to associated token account and use SyncNative to update wrapped SOL balance

const solTransferTransaction = new Transaction()

.add(

SystemProgram.transfer({

fromPubkey: wallet.publicKey,

toPubkey: associatedTokenAccount,

lamports: LAMPORTS_PER_SOL

}),

createSyncNativeInstruction(

associatedTokenAccount

)

)

await sendAndConfirmTransaction(connection, solTransferTransaction, [wallet]);

const accountInfo = await getAccount(connection, associatedTokenAccount);

console.log(`Native: ${accountInfo.isNative}, Lamports: ${accountInfo.amount}`);

})();

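Put simply, wrapping transfers SOL to the ATA derived for NATIVE_MINT and then uses SyncNative to update the wrapped SOL balance.

Unwrap (WSOL -> SOL)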

spl-token unwrap F3XA5sEfzS5AJbZ8733YygzcGV3UKizrGS2FteHjwemB

Unwrapping F3XA5sEfzS5AJbZ8733YygzcGV3UKizrGS2FteHjwemB

Amount: 1 SOL

Recipient: 8TKG1ez28ZYzNgGTein6Pc97yvxWwnHecVL9ZhdZTxd5

Signature: 3dsKnJHHFhBqUxeVf2JLX5WnGmBFqhy2hEC5jeGBhBHFFKQMWmVtVMCcoyjSdBTF8Y61eArYYizjZVT9yp3TXKhZ

Transfer tokens

Put simply:

Tokens move from the sender's ATA to the ATA derived for the recipient's address.

spl-token transfer 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT 50 C1XZNEpofMrDmV17SNzyqaygGo82yVPvGYNQFufMEZCd

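If the recipient does not yet have an ATA for this token, add --fund-recipient so that the sender pays the rent for creating it; --allow-unfunded-recipient additionally lets the transfer go through when the recipient wallet address itself holds no SOL: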

spl-token transfer 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT 50 C1XZNEpofMrDmV17SNzyqaygGo82yVPvGYNQFufMEZCd --allow-unfunded-recipient --fund-recipient

JS code:

import { clusterApiUrl, Connection, Keypair, LAMPORTS_PER_SOL } from '@solana/web3.js';

import { createMint, getOrCreateAssociatedTokenAccount, mintTo, transfer } from '@solana/spl-token';

(async () => {

// Connect to cluster

const connection = new Connection(clusterApiUrl('devnet'), 'confirmed');

// Generate a new wallet keypair and airdrop SOL

const fromWallet = Keypair.generate();

const fromAirdropSignature = await connection.requestAirdrop(fromWallet.publicKey, LAMPORTS_PER_SOL);

// Wait for airdrop confirmation

await connection.confirmTransaction(fromAirdropSignature);

// Generate a new wallet to receive newly minted token

const toWallet = Keypair.generate();

// Create new token mint

const mint = await createMint(connection, fromWallet, fromWallet.publicKey, null, 9);

// Get the token account of the fromWallet address, and if it does not exist, create it

const fromTokenAccount = await getOrCreateAssociatedTokenAccount(

connection,

fromWallet,

mint,

fromWallet.publicKey

);

// Get the token account of the toWallet address, and if it does not exist, create it

const toTokenAccount = await getOrCreateAssociatedTokenAccount(connection, fromWallet, mint, toWallet.publicKey);

// Mint 1 new token to the "fromTokenAccount" account we just created

let signature = await mintTo(

connection,

fromWallet,

mint,

fromTokenAccount.address,

fromWallet.publicKey,

1000000000

);

console.log('mint tx:', signature);

// Transfer the new token to the "toTokenAccount" we just created

signature = await transfer(

connection,

fromWallet,

fromTokenAccount.address,

toTokenAccount.address,

fromWallet.publicKey,

50

);

})();

Check balances

For the local wallet:

spl-token accounts 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT -v

Program Account Delegated Close Authority Balance

-------------------------------------------------------------------------------------------------------------------------------

TokenkegQfeZyiNwAJbNbGKPFXCWuBvf9Ss623VQ5DA 3tykH6gHjjyqYHBigukDXZaj3K6XupjKxYLMA2Srfbc2 100

JS code:

import {getAccount, createMint, createAccount, mintTo, getOrCreateAssociatedTokenAccount, transfer} from "@solana/spl-token";

import {clusterApiUrl, Connection, Keypair, LAMPORTS_PER_SOL} from "@solana/web3.js";

(async () => {

const connection = new Connection(clusterApiUrl('devnet'), 'confirmed');

const wallet = Keypair.generate();

const auxiliaryKeypair = Keypair.generate();

const airdropSignature = await connection.requestAirdrop(

wallet.publicKey,

LAMPORTS_PER_SOL,

);

await connection.confirmTransaction(airdropSignature);

const mint = await createMint(

connection,

wallet,

wallet.publicKey,

wallet.publicKey,

9

);

// Create custom token account

const auxiliaryTokenAccount = await createAccount(

connection,

wallet,

mint,

wallet.publicKey,

auxiliaryKeypair

);

const associatedTokenAccount = await getOrCreateAssociatedTokenAccount(

connection,

wallet,

mint,

wallet.publicKey

);

await mintTo(

connection,

wallet,

mint,

associatedTokenAccount.address,

wallet,

50

);

const accountInfo = await getAccount(connection, associatedTokenAccount.address);

console.log(accountInfo.amount);

// 50

await transfer(

connection,

wallet,

associatedTokenAccount.address,

auxiliaryTokenAccount,

wallet,

50

);

const auxAccountInfo = await getAccount(connection, auxiliaryTokenAccount);

console.log(auxAccountInfo.amount);

// 50

})();

Create a non-fungible token

Create a token type with zero decimals.

The rest of the flow is the same as above.

Disable minting

spl-token authorize 34GbKbRzLMfvpHEFywhe2KyheKMMxab8tQAFj5t2rBYT mint --disable

JS code:

let transaction = new Transaction()

.add(createSetAuthorityInstruction(

mint,

wallet.publicKey,

AuthorityType.MintTokens,

null

));

await web3.sendAndConfirmTransaction(connection, transaction, [wallet]);

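This sets the mint authority to null.

Using multisig

The main difference in spl-token command-line usage when referencing a multisig account is the --owner argument. Normally the signer specified by this argument directly provides the signature that grants its authority; in the multisig case it only points to the address of the multisig account. The signatures are then provided by the members of the multisig signer set, specified with the --multisig-signer argument.

Multisig accounts can be used for any authority on an SPL Token mint or token account.

- Mint account mint authority:

spl-token mint ..., spl-token authorize ... mint ...

- Mint account freeze authority:

spl-token freeze ..., spl-token thaw ..., spl-token authorize ... freeze ...

- Token account owner authority:

spl-token transfer ..., spl-token approve ..., spl-token revoke ..., spl-token burn ..., spl-token wrap ..., spl-token unwrap ..., spl-token authorize ... owner ...

- Token account close authority:

spl-token close ..., spl-token authorize ... close ...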

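In JS, the main difference when using multisig is specifying the owner as the multisig key and passing the list of signers when constructing the transaction. Normally you would provide the signer that has authority to run the transaction as the owner; in the multisig case the owner is the multisig key.

Multisig accounts can be used for any authority on an SPL Token mint or token account.

- Mint account mint authority:

createMint(/* ... */, mintAuthority: multisigKey, /* ... */)

- Mint account freeze authority:

createMint(/* ... */, freezeAuthority: multisigKey, /* ... */)

- Token account owner authority:

getOrCreateAssociatedTokenAccount(/* ... */, owner: multisigKey, /* ... */)

- Token account close authority:

closeAccount(/* ... */, authority: multisigKey, /* ... */)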

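Example: mint with multisig authority

First create keypairs to act as the multisig signer set. In practice these can be any supported signer, such as a Ledger hardware wallet, a keypair file, or a paper wallet. For convenience, generated keypairs are used in this example.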

$ for i in $(seq 3); do solana-keygen new --no-passphrase -so "signer-${i}.json"; done

Wrote new keypair to signer-1.json

Wrote new keypair to signer-2.json

Wrote new keypair to signer-3.json

const signer1 = Keypair.generate();

const signer2 = Keypair.generate();

const signer3 = Keypair.generate();

To create the multisig account, the public keys of the signer set must be collected.

$ for i in $(seq 3); do SIGNER="signer-${i}.json"; echo "$SIGNER: $(solana-keygen pubkey "$SIGNER")"; done

signer-1.json: BzWpkuRrwXHq4SSSFHa8FJf6DRQy4TaeoXnkA89vTgHZ

signer-2.json: DhkUfKgfZ8CF6PAGKwdABRL1VqkeNrTSRx8LZfpPFVNY

signer-3.json: D7ssXHrZJjfpZXsmDf8RwfPxe1BMMMmP1CtmX3WojPmG

console.log(signer1.publicKey.toBase58());

console.log(signer2.publicKey.toBase58());

console.log(signer3.publicKey.toBase58());

/*

BzWpkuRrwXHq4SSSFHa8FJf6DRQy4TaeoXnkA89vTgHZ

DhkUfKgfZ8CF6PAGKwdABRL1VqkeNrTSRx8LZfpPFVNY

D7ssXHrZJjfpZXsmDf8RwfPxe1BMMMmP1CtmX3WojPmG

*/

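Now the multisig account can be created with the spl-token create-multisig subcommand. Its first positional argument is the minimum number of signers (M) that must sign a transaction affecting a token/mint account controlled by this multisig account. The remaining positional arguments are the public keys of all keypairs allowed (N) to sign for the multisig account. This example uses a "2 of 3" multisig account; that is, two of the three allowed keypairs must sign every transaction.

Note: SPL Token multisig accounts are limited to a signer set of 11 signers (1 <= N <= 11), and the minimum number of signers must be no more than N (1 <= M <= N).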

$ spl-token create-multisig 2 BzWpkuRrwXHq4SSSFHa8FJf6DRQy4TaeoXnkA89vTgHZ \

DhkUfKgfZ8CF6PAGKwdABRL1VqkeNrTSRx8LZfpPFVNY D7ssXHrZJjfpZXsmDf8RwfPxe1BMMMmP1CtmX3WojPmG

Creating 2/3 multisig 46ed77fd4WTN144q62BwjU2B3ogX3Xmmc8PT5Z3Xc2re

Signature: 2FN4KXnczAz33SAxwsuevqrD1BvikP6LUhLie5Lz4ETt594X8R7yvMZzZW2zjmFLPsLQNHsRuhQeumExHbnUGC9A

const multisigKey = await createMultisig(

connection,

payer,

[

signer1.publicKey,

signer2.publicKey,

signer3.publicKey

],

2

);

console.log(`Created 2/3 multisig ${multisigKey.toBase58()}`);

// Created 2/3 multisig 46ed77fd4WTN144q62BwjU2B3ogX3Xmmc8PT5Z3Xc2re
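The limits noted above (1 <= N <= 11 and 1 <= M <= N) can be checked locally before any transaction is sent. The following is a minimal sketch; checkMultisigParams is a hypothetical helper rather than a library function, and the only values it relies on are the limits stated in the note.

```javascript
// Maximum size of a multisig signer set, as stated in the note above.
const MAX_SIGNERS = 11;

// checkMultisigParams is a hypothetical pre-flight check, not an SDK call.
// m: minimum number of signatures; signerPubkeys: the N allowed signers.
function checkMultisigParams(m, signerPubkeys) {
  const n = signerPubkeys.length;
  if (n < 1 || n > MAX_SIGNERS) {
    throw new RangeError(`N must be between 1 and ${MAX_SIGNERS}, got ${n}`);
  }
  if (!Number.isInteger(m) || m < 1 || m > n) {
    throw new RangeError(`M must be an integer between 1 and N (${n}), got ${m}`);
  }
  return { m, n };
}

// The "2 of 3" configuration used in this example.
console.log(checkMultisigParams(2, ['signer1', 'signer2', 'signer3'])); // { m: 2, n: 3 }
```

Running a check like this before calling createMultisig or spl-token create-multisig gives a clearer error than a failed transaction simulation.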

Next, create the token mint and receiving accounts as described earlier, and set the mint account's mint authority to the multisig account.

$ spl-token create-token

Creating token 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o

Signature: 3n6zmw3hS5Hyo5duuhnNvwjAbjzC42uzCA3TTsrgr9htUonzDUXdK1d8b8J77XoeSherqWQM8mD8E1TMYCpksS2r

$ spl-token create-account 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o

Creating account EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC

Signature: 5mVes7wjE7avuFqzrmSCWneKBQyPAjasCLYZPNSkmqmk2YFosYWAP9hYSiZ7b7NKpV866x5gwyKbbppX3d8PcE9s

$ spl-token authorize 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o mint 46ed77fd4WTN144q62BwjU2B3ogX3Xmmc8PT5Z3Xc2re

Updating 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o

Current mint authority: 5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE

New mint authority: 46ed77fd4WTN144q62BwjU2B3ogX3Xmmc8PT5Z3Xc2re

Signature: yy7dJiTx1t7jvLPCRX5RQWxNRNtFwvARSfbMJG94QKEiNS4uZcp3GhhjnMgZ1CaWMWe4jVEMy9zQBoUhzomMaxC

const mint = await createMint(

connection,

payer,

multisigKey,

multisigKey,

9

);

const associatedTokenAccount = await getOrCreateAssociatedTokenAccount(

connection,

payer,

mint,

signer1.publicKey

);

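To demonstrate that the mint account is now under the control of the multisig account, attempting to mint with only one multisig signer fails: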

$ spl-token mint 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o 1 EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC \

--owner 46ed77fd4WTN144q62BwjU2B3ogX3Xmmc8PT5Z3Xc2re \

--multisig-signer signer-1.json

Minting 1 tokens

Token: 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o

Recipient: EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC

RPC response error -32002: Transaction simulation failed: Error processing Instruction 0: missing required signature for instruction

try {

await mintTo(

connection,

payer,

mint,

associatedTokenAccount.address,

multisigKey,

1

)

} catch (error) {

console.log(error);

}

// Error: Signature verification failed

Repeating the operation with a second multisig signer succeeds:

$ spl-token mint 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o 1 EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC \

--owner 46ed77fd4WTN144q62BwjU2B3ogX3Xmmc8PT5Z3Xc2re \

--multisig-signer signer-1.json \

--multisig-signer signer-2.json

Minting 1 tokens

Token: 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o

Recipient: EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC

Signature: 2ubqWqZb3ooDuc8FLaBkqZwzguhtMgQpgMAHhKsWcUzjy61qtJ7cZ1bfmYktKUfnbMYWTC1S8zdKgU6m4THsgspT

await mintTo(

connection,

payer,

mint,

associatedTokenAccount.address,

multisigKey,

1,

[

signer1,

signer2

]

)

const mintInfo = await getMint(

connection,

mint

)

console.log(`Minted ${mintInfo.supply} token`);

// Minted 1 token

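Example: offline signing with multisig

Sometimes online signing is not possible or not desired, for example when the signers are not in the same geographic location or use air-gapped devices that are not connected to the network. In that case we use offline signing, which combines the previous multisig example with offline signing and a nonce account.

This example uses the same mint account, token account, multisig account, and multisig signer-set keypair filenames as the online example, plus a nonce account that is created here: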

$ solana-keygen new -o nonce-keypair.json

...

======================================================================

pubkey: Fjyud2VXixk2vCs4DkBpfpsq48d81rbEzh6deKt7WvPj

======================================================================

$ solana create-nonce-account nonce-keypair.json 1

Signature: 3DALwrAAmCDxqeb4qXZ44WjpFcwVtgmJKhV4MW5qLJVtWeZ288j6Pzz1F4BmyPpnGLfx2P8MEJXmqPchX5y2Lf3r

$ solana nonce-account Fjyud2VXixk2vCs4DkBpfpsq48d81rbEzh6deKt7WvPj

Balance: 0.01 SOL

Minimum Balance Required: 0.00144768 SOL

Nonce blockhash: 6DPt2TfFBG7sR4Hqu16fbMXPj8ddHKkbU4Y3EEEWrC2E

Fee: 5000 lamports per signature

Authority: 5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE

const connection = new Connection(

clusterApiUrl('devnet'),

'confirmed',

);

const onlineAccount = Keypair.generate();

const nonceAccount = Keypair.generate();

const minimumAmount = await connection.getMinimumBalanceForRentExemption(

NONCE_ACCOUNT_LENGTH,

);

// Form CreateNonceAccount transaction

const transaction = new Transaction()

.add(

SystemProgram.createNonceAccount({

fromPubkey: onlineAccount.publicKey,

noncePubkey: nonceAccount.publicKey,

authorizedPubkey: onlineAccount.publicKey,

lamports: minimumAmount,

}),

);

await web3.sendAndConfirmTransaction(connection, transaction, [onlineAccount, nonceAccount])

const nonceAccountData = await connection.getNonce(

nonceAccount.publicKey,

'confirmed',

);

console.log(nonceAccountData);

/*

NonceAccount {

authorizedPubkey: '5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE'

nonce: '6DPt2TfFBG7sR4Hqu16fbMXPj8ddHKkbU4Y3EEEWrC2E',

feeCalculator: { lamportsPerSignature: 5000 }

}

*/

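For the fee-payer and nonce-authority roles, a local hot wallet with the address 5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE is used.

First, build a template command by specifying all signers by their public key. When this command is run, all signers are listed as "Absent Signers" in the output. Each offline signer then runs this command to generate their own signature.

Note: the argument to the --blockhash parameter is the "Nonce blockhash:" field from the designated durable nonce account.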

$ spl-token mint 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o 1 EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC \

--owner 46ed77fd4WTN144q62BwjU2B3ogX3Xmmc8PT5Z3Xc2re \

--multisig-signer BzWpkuRrwXHq4SSSFHa8FJf6DRQy4TaeoXnkA89vTgHZ \

--multisig-signer DhkUfKgfZ8CF6PAGKwdABRL1VqkeNrTSRx8LZfpPFVNY \

--blockhash 6DPt2TfFBG7sR4Hqu16fbMXPj8ddHKkbU4Y3EEEWrC2E \

--fee-payer 5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE \

--nonce Fjyud2VXixk2vCs4DkBpfpsq48d81rbEzh6deKt7WvPj \

--nonce-authority 5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE \

--sign-only \

--mint-decimals 9

Minting 1 tokens

Token: 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o

Recipient: EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC

Blockhash: 6DPt2TfFBG7sR4Hqu16fbMXPj8ddHKkbU4Y3EEEWrC2E

Absent Signers (Pubkey):

5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE

BzWpkuRrwXHq4SSSFHa8FJf6DRQy4TaeoXnkA89vTgHZ

DhkUfKgfZ8CF6PAGKwdABRL1VqkeNrTSRx8LZfpPFVNY

Next, each offline signer executes the template command, replacing each instance of their public key with the corresponding keypair.

$ spl-token mint 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o 1 EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC \

--owner 46ed77fd4WTN144q62BwjU2B3ogX3Xmmc8PT5Z3Xc2re \

--multisig-signer signer-1.json \

--multisig-signer DhkUfKgfZ8CF6PAGKwdABRL1VqkeNrTSRx8LZfpPFVNY \

--blockhash 6DPt2TfFBG7sR4Hqu16fbMXPj8ddHKkbU4Y3EEEWrC2E \

--fee-payer 5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE \

--nonce Fjyud2VXixk2vCs4DkBpfpsq48d81rbEzh6deKt7WvPj \

--nonce-authority 5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE \

--sign-only \

--mint-decimals 9

Minting 1 tokens

Token: 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o

Recipient: EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC

Blockhash: 6DPt2TfFBG7sR4Hqu16fbMXPj8ddHKkbU4Y3EEEWrC2E

Signers (Pubkey=Signature):

BzWpkuRrwXHq4SSSFHa8FJf6DRQy4TaeoXnkA89vTgHZ=2QVah9XtvPAuhDB2QwE7gNaY962DhrGP6uy9zeN4sTWvY2xDUUzce6zkQeuT3xg44wsgtUw2H5Rf8pEArPSzJvHX

Absent Signers (Pubkey):

5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE

DhkUfKgfZ8CF6PAGKwdABRL1VqkeNrTSRx8LZfpPFVNY

$ spl-token mint 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o 1 EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC \

--owner 46ed77fd4WTN144q62BwjU2B3ogX3Xmmc8PT5Z3Xc2re \

--multisig-signer BzWpkuRrwXHq4SSSFHa8FJf6DRQy4TaeoXnkA89vTgHZ \

--multisig-signer signer-2.json \

--blockhash 6DPt2TfFBG7sR4Hqu16fbMXPj8ddHKkbU4Y3EEEWrC2E \

--fee-payer 5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE \

--nonce Fjyud2VXixk2vCs4DkBpfpsq48d81rbEzh6deKt7WvPj \

--nonce-authority 5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE \

--sign-only \

--mint-decimals 9

Minting 1 tokens

Token: 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o

Recipient: EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC

Blockhash: 6DPt2TfFBG7sR4Hqu16fbMXPj8ddHKkbU4Y3EEEWrC2E

Signers (Pubkey=Signature):

DhkUfKgfZ8CF6PAGKwdABRL1VqkeNrTSRx8LZfpPFVNY=2brZbTiCfyVYSCp6vZE3p7qCDeFf3z1JFmJHPBrz8SnWSDZPjbpjsW2kxFHkktTNkhES3y6UULqS4eaWztLW7FrU

Absent Signers (Pubkey):

5hbZyJ3KRuFvdy5QBxvE9KwK17hzkAUkQHZTxPbiWffE

BzWpkuRrwXHq4SSSFHa8FJf6DRQy4TaeoXnkA89vTgHZ

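Finally, the offline signers communicate the Pubkey=Signature pairs from their command output to the party that will broadcast the transaction to the cluster. The broadcasting party then runs the template command after modifying it as follows:

- Replace any corresponding public keys with their keypairs (--fee-payer ... and --nonce-authority ... in this example)

- Remove the --sign-only argument and, in the case of the mint subcommand, the --mint-decimals ... argument, since its value will be queried from the cluster

- Add the offline signatures to the template command via the --signer argument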

$ spl-token mint 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o 1 EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC \

--owner 46ed77fd4WTN144q62BwjU2B3ogX3Xmmc8PT5Z3Xc2re \

--multisig-signer BzWpkuRrwXHq4SSSFHa8FJf6DRQy4TaeoXnkA89vTgHZ \

--multisig-signer DhkUfKgfZ8CF6PAGKwdABRL1VqkeNrTSRx8LZfpPFVNY \

--blockhash 6DPt2TfFBG7sR4Hqu16fbMXPj8ddHKkbU4Y3EEEWrC2E \

--fee-payer hot-wallet.json \

--nonce Fjyud2VXixk2vCs4DkBpfpsq48d81rbEzh6deKt7WvPj \

--nonce-authority hot-wallet.json \

--signer BzWpkuRrwXHq4SSSFHa8FJf6DRQy4TaeoXnkA89vTgHZ=2QVah9XtvPAuhDB2QwE7gNaY962DhrGP6uy9zeN4sTWvY2xDUUzce6zkQeuT3xg44wsgtUw2H5Rf8pEArPSzJvHX \

--signer DhkUfKgfZ8CF6PAGKwdABRL1VqkeNrTSRx8LZfpPFVNY=2brZbTiCfyVYSCp6vZE3p7qCDeFf3z1JFmJHPBrz8SnWSDZPjbpjsW2kxFHkktTNkhES3y6UULqS4eaWztLW7FrU

Minting 1 tokens

Token: 4VNVRJetwapjwYU8jf4qPgaCeD76wyz8DuNj8yMCQ62o

Recipient: EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC

Signature: 2AhZXVPDBVBxTQLJohyH1wAhkkSuxRiYKomSSXtwhPL9AdF3wmhrrJGD7WgvZjBPLZUFqWrockzPp9S3fvzbgicy

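In JS, first build the raw transaction from the nonce account information and the token account key. All signers of the transaction are noted as part of the raw transaction. This transaction is later handed to the signers for signing.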

const nonceAccountInfo = await connection.getAccountInfo(

nonceAccount.publicKey,

'confirmed'

);

const nonceAccountFromInfo = web3.NonceAccount.fromAccountData(nonceAccountInfo.data);

console.log(nonceAccountFromInfo);

const nonceInstruction = web3.SystemProgram.nonceAdvance({

authorizedPubkey: onlineAccount.publicKey,

noncePubkey: nonceAccount.publicKey

});

const nonce = nonceAccountFromInfo.nonce;

const mintToTransaction = new web3.Transaction({

feePayer: onlineAccount.publicKey,

nonceInfo: {nonce, nonceInstruction}

})

.add(

createMintToInstruction(

mint,

associatedTokenAccount.address,

multisigKey,

1,

[

signer1,

onlineAccount

],

TOKEN_PROGRAM_ID

)

);

Next, each offline signer takes the transaction buffer and signs it with their corresponding key.

let mintToTransactionBuffer = mintToTransaction.serializeMessage();

let onlineSignature = nacl.sign.detached(mintToTransactionBuffer, onlineAccount.secretKey);

mintToTransaction.addSignature(onlineAccount.publicKey, onlineSignature);

// Handed to offline signer for signature

let offlineSignature = nacl.sign.detached(mintToTransactionBuffer, signer1.secretKey);

mintToTransaction.addSignature(signer1.publicKey, offlineSignature);

let rawMintToTransaction = mintToTransaction.serialize();

Finally, the hot wallet takes the serialized transaction and broadcasts it to the network.

// Send to online signer for broadcast to network

await web3.sendAndConfirmRawTransaction(connection, rawMintToTransaction);

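JSON RPC methods

There is a rich set of JSON RPC methods available for use with SPL Token:

- getTokenAccountBalance

- getTokenAccountsByDelegate

- getTokenAccountsByOwner

- getTokenLargestAccounts

- getTokenSupply

See https://docs.solana.com/apps/jsonrpc-api for more details.

Additionally, the versatile getProgramAccounts JSON RPC method can be employed in various ways to fetch SPL Token accounts of interest.

Finding all token accounts for a specific mint

To find all token accounts for the TESTpKgj42ya3st2SQTKiANjTBmncQSCqLAZGcSPLGM mint: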

curl http://api.mainnet-beta.solana.com -X POST -H "Content-Type: application/json" -d '

{

"jsonrpc": "2.0",

"id": 1,

"method": "getProgramAccounts",

"params": [

"TokenkegQfeZyiNwAJbNbGKPFXCWuBvf9Ss623VQ5DA",

{

"encoding": "jsonParsed",

"filters": [

{

"dataSize": 165

},

{

"memcmp": {

"offset": 0,

"bytes": "TESTpKgj42ya3st2SQTKiANjTBmncQSCqLAZGcSPLGM"

}

}

]

}

]

}

'

The "dataSize": 165 filter selects all token accounts, and the "memcmp": ... filter then selects based on the mint address stored within each token account.

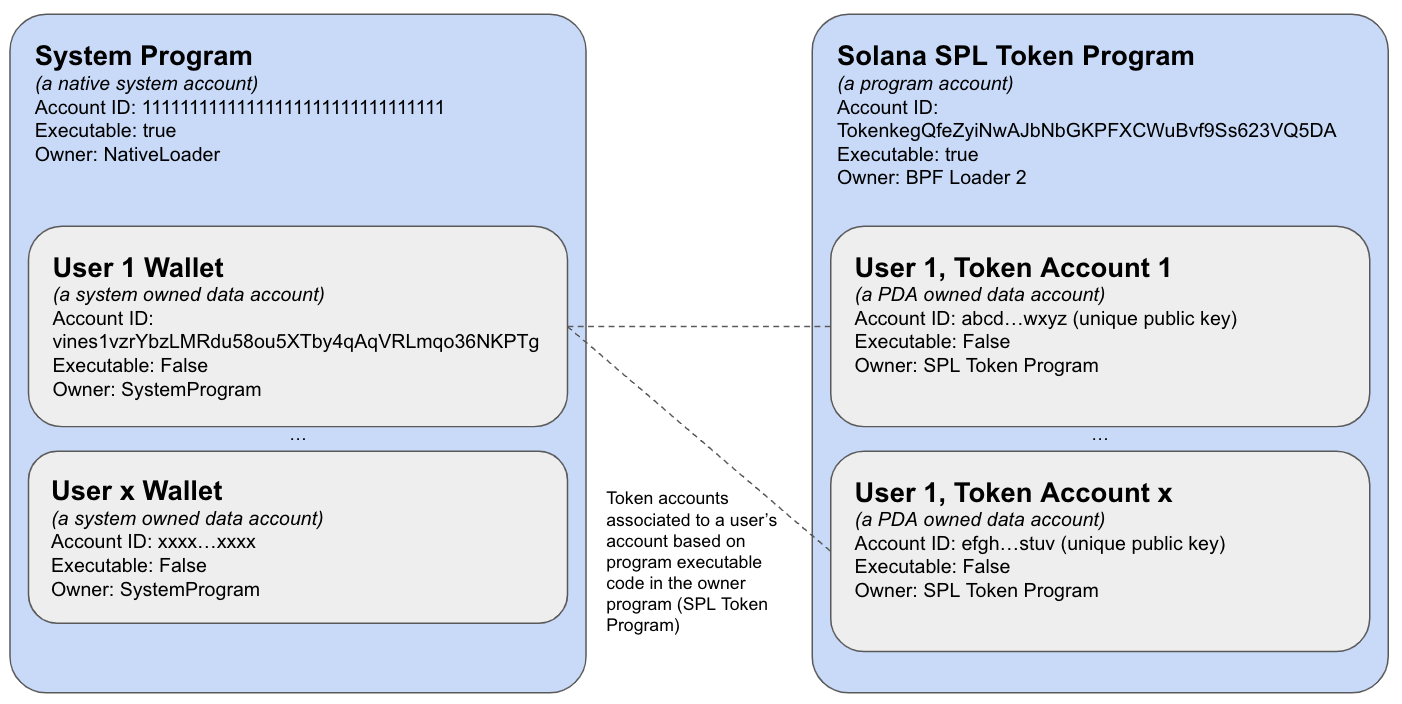

Finding all token accounts for a wallet

To find all token accounts owned by the vines1vzrYbzLMRdu58ou5XTby4qAqVRLmqo36NKPTg user:

curl http://api.mainnet-beta.solana.com -X POST -H "Content-Type: application/json" -d '

{

"jsonrpc": "2.0",

"id": 1,

"method": "getProgramAccounts",

"params": [

"TokenkegQfeZyiNwAJbNbGKPFXCWuBvf9Ss623VQ5DA",

{

"encoding": "jsonParsed",

"filters": [

{

"dataSize": 165

},

{

"memcmp": {

"offset": 32,

"bytes": "vines1vzrYbzLMRdu58ou5XTby4qAqVRLmqo36NKPTg"

}

}

]

}

]

}

'

The "dataSize": 165 filter selects all token accounts, and the "memcmp": ... filter then selects based on the owner address stored within each token account.

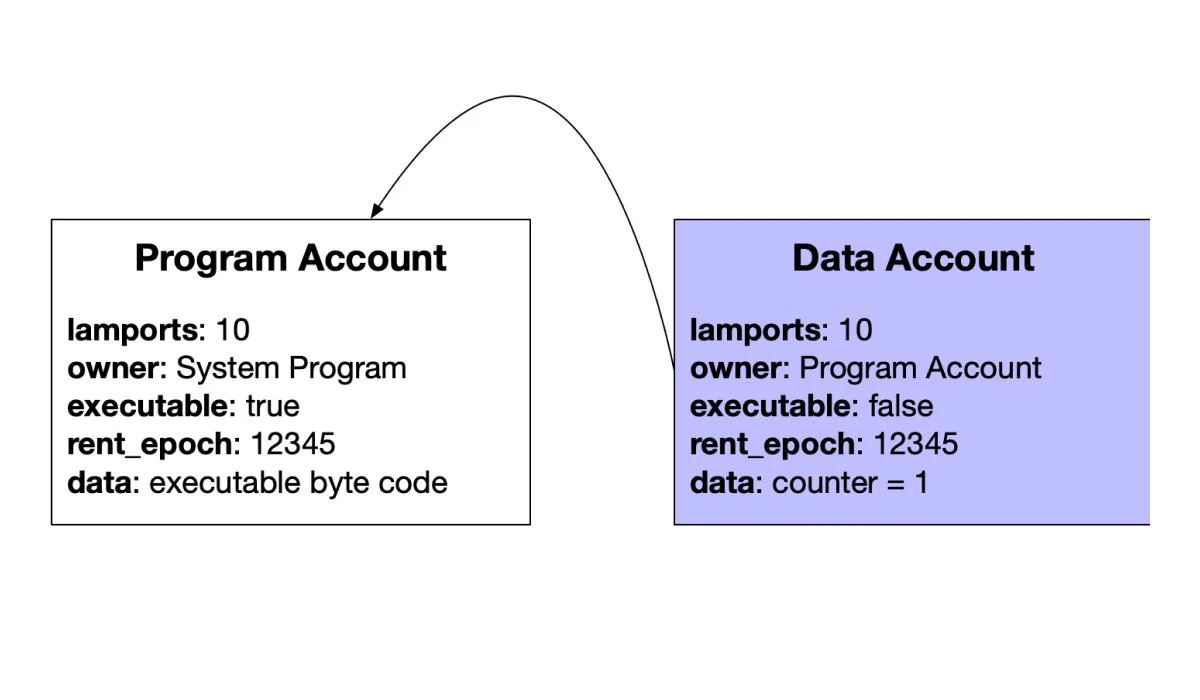
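The two requests above differ only in the memcmp offset: 0 matches the mint address and 32 matches the owner address. As a rough sketch, the request body could be built by a small helper; buildTokenAccountQuery is a hypothetical name, and the constants are taken directly from the curl examples.

```javascript
// Values taken from the two curl requests above.
const TOKEN_PROGRAM_ID = 'TokenkegQfeZyiNwAJbNbGKPFXCWuBvf9Ss623VQ5DA';
const TOKEN_ACCOUNT_SIZE = 165; // bytes, as used by the dataSize filter
const FIELD_OFFSETS = new Map([
  ['mint', 0],   // the mint address is matched at byte offset 0
  ['owner', 32], // the owner address is matched at byte offset 32
]);

// buildTokenAccountQuery is a hypothetical helper, not part of any SDK.
// It returns the JSON body for a getProgramAccounts request.
function buildTokenAccountQuery(field, address) {
  if (!FIELD_OFFSETS.has(field)) {
    throw new Error(`unsupported field: ${field}`);
  }
  return {
    jsonrpc: '2.0',
    id: 1,
    method: 'getProgramAccounts',
    params: [
      TOKEN_PROGRAM_ID,
      {
        encoding: 'jsonParsed',
        filters: [
          { dataSize: TOKEN_ACCOUNT_SIZE },
          { memcmp: { offset: FIELD_OFFSETS.get(field), bytes: address } },
        ],
      },
    ],
  };
}

const body = buildTokenAccountQuery('owner', 'vines1vzrYbzLMRdu58ou5XTby4qAqVRLmqo36NKPTg');
console.log(JSON.stringify(body, null, 2));
```

The printed JSON can be sent as the POST body to an RPC endpoint, in the same way as the -d argument of the curl commands.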

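Operational overview

Creating a new token type

A new token type can be created by initializing a new Mint with the InitializeMint instruction. The Mint is used to create or "mint" new tokens, and these tokens are stored in Accounts. A Mint is associated with each Account, which means that the total supply of a particular token type is equal to the balances of all the associated Accounts.

Note that the InitializeMint instruction does not require the Solana account being initialized to also be a signer. The InitializeMint instruction should be processed atomically with the system instruction that creates the Solana account, by including both instructions in the same transaction.

Once a Mint is initialized, the mint_authority can create new tokens using the MintTo instruction. As long as a Mint contains a valid mint_authority, the Mint is considered to have a non-fixed supply, and the mint_authority can create new tokens with the MintTo instruction at any time. The SetAuthority instruction can be used to irreversibly set the Mint's authority to None, which makes the Mint's supply fixed; no further tokens can ever be minted.

Token supply can be reduced at any time by issuing a Burn instruction, which removes and discards tokens from an Account.

Creating accounts

Accounts hold token balances and are created using the InitializeAccount instruction. Each Account has an owner who must be present as a signer in some instructions.

An Account's owner may transfer ownership of the Account to another address using the SetAuthority instruction.

Note that the InitializeAccount instruction does not require the Solana account being initialized to also be a signer. The InitializeAccount instruction should be processed atomically with the system instruction that creates the Solana account, by including both instructions in the same transaction.

Transferring tokens

Balances can be transferred between Accounts using the Transfer instruction. The owner of the source Account must be present as a signer in the Transfer instruction when the source and destination Accounts are different.

Note that when the source and destination of a Transfer are the same, the Transfer always succeeds. A successful Transfer therefore does not necessarily imply that the involved Accounts were valid SPL Token accounts, that any tokens were moved, or that the source Account was present as a signer. We strongly recommend that developers check that the source and destination are different before invoking a Transfer instruction from within their program.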
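The recommended check can be as small as a guard that runs before the Transfer instruction is built. This is only a sketch: assertDistinctAccounts is a hypothetical name, and it assumes both addresses are available as base58 strings.

```javascript
// Guard against self-transfers, which succeed without moving any tokens.
// assertDistinctAccounts is a hypothetical helper; addresses are assumed to be
// base58 strings so that plain string comparison is sufficient.
function assertDistinctAccounts(source, destination) {
  if (source === destination) {
    throw new Error('source and destination token accounts must be different');
  }
}

// Passes silently because the two addresses differ.
assertDistinctAccounts(
  '3tykH6gHjjyqYHBigukDXZaj3K6XupjKxYLMA2Srfbc2',
  'EX8zyi2ZQUuoYtXd4MKmyHYLTjqFdWeuoTHcsTdJcKHC',
);
```

An on-chain program would perform the equivalent comparison on the account keys before invoking Transfer.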

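Burning

The Burn instruction decreases an Account's token balance without transferring to another Account, effectively removing the tokens from circulation permanently.

There is no other way to reduce supply on chain. Burning is similar to transferring to an account whose private key is unknown, or to destroying a private key, but using the Burn instruction is more explicit and can be confirmed on chain by any party.

Authority delegation

Account owners may delegate authority over some or all of their token balance using the Approve instruction. A delegate may transfer or burn up to the amount delegated to it. The Account's owner can revoke the delegation with the Revoke instruction.

Multisignatures

M of N multisignatures are supported and can be used in place of Mint authorities, Account owners, or delegates. A multisignature authority must be initialized with the InitializeMultisig instruction. Initialization specifies the set of N public keys that are valid, and the number M of those N that must be present as instruction signers for the authority to be legitimate.

Note that the InitializeMultisig instruction does not require the Solana account being initialized to also be a signer. The InitializeMultisig instruction should be processed atomically with the system instruction that creates the Solana account, by including both instructions in the same transaction.

Additionally, multisignatures allow duplicate accounts in the signer set, which makes very simple weighting schemes possible. For example, a 2 of 4 multisig can be constructed with 3 unique public keys, with one public key specified twice to give it double voting power.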
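One way to picture the weighting described above is to count, for each key that actually signed, every slot of the signer set it occupies. The code below is an illustrative model under that assumption, not the Token program's source; countMatchedSigners and isAuthorized are hypothetical names.

```javascript
// Count how many slots of the multisig signer set are covered by the keys
// that signed. A key listed twice in the set covers two slots.
function countMatchedSigners(multisigSigners, presentSigners) {
  const matched = new Array(multisigSigners.length).fill(false);
  let count = 0;
  for (const signer of new Set(presentSigners)) {
    multisigSigners.forEach((key, position) => {
      if (key === signer && !matched[position]) {
        matched[position] = true;
        count += 1;
      }
    });
  }
  return count;
}

// The authority is satisfied once at least m slots are covered.
function isAuthorized(m, multisigSigners, presentSigners) {
  return countMatchedSigners(multisigSigners, presentSigners) >= m;
}

// A 2 of 4 multisig built from 3 unique keys, with key A listed twice.
const signerSet = ['A', 'A', 'B', 'C'];
console.log(isAuthorized(2, signerSet, ['A']));      // true
console.log(isAuthorized(2, signerSet, ['B']));      // false
console.log(isAuthorized(2, signerSet, ['B', 'C'])); // true
```

In this model key A alone reaches the threshold, while B and C each need a co-signer.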

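Freezing accounts

The Mint may also contain a freeze_authority, which can be used to issue FreezeAccount instructions that render an Account unusable. Token instructions that include a frozen Account fail until the Account is thawed with the ThawAccount instruction. The SetAuthority instruction can be used to change a Mint's freeze_authority. If a Mint's freeze_authority is set to None, account freezing and thawing are permanently disabled, and all currently frozen Accounts stay frozen permanently.

Wrapping SOL

The Token Program can be used to wrap native SOL. Doing so allows native SOL to be treated like any other Token Program token type, which is useful when it is handled by other programs that interact with the Token Program's interface.

Accounts containing wrapped SOL are associated with a specific Mint called the "Native Mint", with the public key So11111111111111111111111111111111111111112.

These accounts have a few unique behaviors:

- InitializeAccount sets the balance of the initialized Account to the SOL balance of the Solana account being initialized, resulting in a token balance equal to the SOL balance.

- Transfers not only modify the token balance but also move an equal amount of SOL from the source account to the destination account.

- Burning is not supported.

- When closing an Account, the balance may be non-zero.

The Native Mint supply always reports 0, regardless of how much SOL is currently wrapped.

Rent-exemption

To ensure a reliable calculation of supply, consistency of Mints, and consistency of Multisig accounts, all Solana accounts holding an Account, Mint, or Multisig must contain enough SOL to be considered rent-exempt.

Closing accounts

An account may be closed using the CloseAccount instruction. When an Account is closed, all remaining SOL is transferred to another Solana account (which does not have to be associated with the Token Program). Non-native Accounts must have a balance of zero to be closed.

Non-fungible tokens

An NFT is simply a token type where only a single token has been minted.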

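Wallet integration guide

This section describes how to integrate SPL Token support into an existing wallet that supports native SOL. It assumes a model in which the user has a single system account as their main wallet address, which they send and receive SOL from.

Although all SPL Token accounts have their own address on chain, there is no need to surface these additional addresses to the user.

Wallets use two programs:

- SPL Token program: the generic program used by all SPL Tokens

- SPL Associated Token Account program: defines the convention and provides the mechanism for mapping the user's wallet address to the associated token accounts they hold.

How to fetch and display token holdings

The getTokenAccountsByOwner JSON RPC method can be used to fetch all token accounts for a wallet address.

For each token mint, the wallet could have multiple token accounts: the associated token account and/or other ancillary token accounts.

By convention, it is suggested that wallets roll up the balances of all token accounts of the same token mint into a single balance for the user, to shield the user from this complexity.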
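The roll-up suggested above amounts to summing raw amounts per mint. A minimal sketch follows; aggregateByMint is a hypothetical helper, and the input shape (objects with mint and amount fields) is an assumption about how a wallet might represent decoded token accounts.

```javascript
// Sum the raw balances of all token accounts that share the same mint.
// aggregateByMint is a hypothetical helper; each input item is assumed to
// look like { mint: string, amount: bigint | string | number }.
function aggregateByMint(tokenAccounts) {
  const totals = new Map();
  for (const { mint, amount } of tokenAccounts) {
    const current = totals.get(mint) ?? 0n;
    totals.set(mint, current + BigInt(amount));
  }
  return totals;
}

// One associated account and one ancillary account for the same mint,
// plus an account for a second mint.
const totals = aggregateByMint([
  { mint: 'MintA', amount: 50n },
  { mint: 'MintA', amount: 25n },
  { mint: 'MintB', amount: '7' },
]);
console.log(totals.get('MintA')); // 75n
console.log(totals.get('MintB')); // 7n
```

The wallet would then display one line per mint, using the mint's decimals to format the summed raw amount.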

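See the "Garbage collecting ancillary token accounts" section for suggestions on how the wallet should clean up ancillary token accounts on the user's behalf.

Associated token account

Before the user can receive tokens, their associated token account must be created on chain, which requires a small amount of SOL to mark the account as rent-exempt.

There is no restriction on who can create a user's associated token account. It could be created by the wallet on behalf of the user, or funded by a third party through an airdrop campaign.

The creation process is described in the Associated Token Account program documentation.

It is highly recommended that the wallet create the associated token account for a given SPL Token itself before indicating to the user that they are able to receive that type of SPL Token (typically done by showing the user their receiving address). A wallet that chooses not to perform this step may limit its users' ability to receive SPL Tokens from other wallets.

Sample "Add token" workflow

When the user wants to receive SPL Tokens of a certain type, they should first fund their associated token account, in order to:

- Maximize interoperability with other wallet implementations

- Avoid pushing the cost of creating their associated token account onto the first sender

The wallet should provide a UI that allows the user to "add a token". The user selects the kind of token and is shown how much SOL it will cost to add it.

Upon confirmation, the wallet creates the associated token account as described in the Associated Token Account program documentation.

Sample "Airdrop campaign" workflow

For each recipient wallet address, send a transaction containing:

- An instruction that creates the associated token account on the recipient's behalf.

- A TokenInstruction::Transfer instruction to complete the transfer.

Associated token account ownership

⚠️ The wallet should never use TokenInstruction::SetAuthority to set the AccountOwner authority of an associated token account to another address.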

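Ancillary token accounts

At any time, ownership of an existing SPL Token account may be assigned to the user. One way to do this is with the spl-token authorize <TOKEN_ADDRESS> owner <USER_ADDRESS> command. Wallets should be prepared to gracefully manage token accounts that they themselves did not create for the user.

Transferring tokens between wallets

The preferred method of transferring tokens between wallets is to transfer into the recipient's associated token account.

The recipient must provide their main wallet address to the sender. The sender then:

- Derives the associated token account for the recipient

- Fetches the recipient's associated token account over RPC and checks that it exists

- If the recipient's associated token account does not yet exist, the sender's wallet should create it as described in the Associated Token Account program documentation. The sender's wallet may choose to inform the user that, because of the account creation, the transfer will require more SOL than normal. However, a wallet that chooses not to support creating the recipient's associated token account at this time should present a message to the user with enough information to find a workaround that achieves their goal

- Uses TokenInstruction::Transfer to complete the transfer

The sender's wallet must not require that the recipient's main wallet address hold a balance before allowing the transfer.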

Registry for token details

At the moment there are a few solutions for Token Mint registries.

A decentralized solution is in progress.

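Garbage collecting ancillary token accounts

Wallets should empty ancillary token accounts as quickly as practical by transferring the funds into the user's associated token account. This serves two purposes:

- If the user is the close authority for the ancillary account, the wallet can reclaim SOL for the user by closing the account.

- If the ancillary account was funded by a third party, once the account is emptied that third party may close the account and reclaim the SOL.

One natural time to garbage collect ancillary token accounts is when the user next sends tokens. The additional instructions to do so can be added to the existing transaction and do not require an additional fee.

Cleanup pseudo steps:

- For all non-empty ancillary token accounts, add a TokenInstruction::Transfer instruction that transfers the full token amount to the user's associated token account.

- For all empty ancillary token accounts where the user is the close authority, add a TokenInstruction::CloseAccount instruction.

If adding one or more of the cleanup instructions causes the transaction to exceed the maximum allowed transaction size, remove those extra cleanup instructions. They can be cleaned up during the next send operation.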
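The pseudo steps above can be sketched as a function that turns a list of ancillary accounts into a list of planned instructions. Everything here is illustrative: planCleanup, the account object shape, and the plain-object instruction descriptors are assumptions, not SDK types. The sketch follows the two steps literally, so an account is only closed if it was already empty when fetched.

```javascript
// Plan cleanup instructions for a user's ancillary token accounts.
// planCleanup and the object shapes are hypothetical. Each account is assumed
// to look like { address: string, amount: bigint, closeAuthority: string }.
function planCleanup(ancillaryAccounts, userWallet, userAssociatedAccount) {
  const plan = [];
  for (const account of ancillaryAccounts) {
    if (account.amount > 0n) {
      // Step 1: move the full balance into the associated token account.
      plan.push({
        instruction: 'Transfer',
        source: account.address,
        destination: userAssociatedAccount,
        amount: account.amount,
      });
    } else if (account.closeAuthority === userWallet) {
      // Step 2: close empty accounts the user is allowed to close.
      plan.push({
        instruction: 'CloseAccount',
        account: account.address,
        destination: userWallet,
      });
    }
  }
  return plan;
}

const plan = planCleanup(
  [
    { address: 'Aux1', amount: 10n, closeAuthority: 'UserWallet' },
    { address: 'Aux2', amount: 0n, closeAuthority: 'UserWallet' },
    { address: 'Aux3', amount: 0n, closeAuthority: 'ThirdParty' },
  ],
  'UserWallet',
  'UserAta',
);
console.log(plan.map((step) => `${step.instruction}:${step.source ?? step.account}`));
// [ 'Transfer:Aux1', 'CloseAccount:Aux2' ]
```

A wallet could append these planned steps to a pending send transaction and drop them again if the transaction would exceed the size limit.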

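The spl-token gc command provides an example implementation of this cleanup process.

Token vesting

There are currently two solutions available for vesting SPL tokens:

1) Bonfida token-vesting

This program allows you to lock arbitrary SPL tokens and release the locked tokens according to a determined unlock schedule. An unlock schedule is made of a unix timestamp and a token amount. When initializing a vesting contract, the creator can pass an array of unlock schedules of arbitrary size, which gives the creator complete control over how the tokens unlock over time.

Unlocking works by pushing a permissionless crank on the contract that moves the tokens to the pre-specified address. The recipient address of a vesting contract can be modified by the owner of the current recipient key, which means that tokens locked in a vesting contract can be traded.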
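To make the schedule idea concrete, the sketch below models an unlock schedule as an array of timestamp and amount pairs and computes how much has unlocked at a given time. The field names releaseTime and amount and the function unlockedAmount are assumptions for illustration only; they are not taken from the Bonfida program's interface.

```javascript
// Compute the total amount unlocked at a given time.
// Each schedule entry is assumed to look like
// { releaseTime: number (unix seconds), amount: bigint (raw base units) }.
function unlockedAmount(schedule, nowSeconds) {
  return schedule
    .filter((entry) => entry.releaseTime <= nowSeconds)
    .reduce((sum, entry) => sum + entry.amount, 0n);
}

// Three tranches released at three points in time.
const schedule = [
  { releaseTime: 1700000000, amount: 100n },
  { releaseTime: 1710000000, amount: 200n },
  { releaseTime: 1720000000, amount: 300n },
];

console.log(unlockedAmount(schedule, 1705000000)); // 100n
console.log(unlockedAmount(schedule, 1720000000)); // 600n
```

Because the array can have any length, the creator can express cliffs, linear releases, or irregular tranches with the same structure.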

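2) Streamflow Timelock

Enables creation, withdrawal, cancellation, and transfer of token vesting contracts using time-based locks and escrow accounts. Contracts are by default cancelable by the creator and transferable by the recipient.

The vesting contract creator can choose among various options at creation time, such as:

- SPL token and amount to be vested

- Recipient

- Exact start and end date

- (Optional) cliff date and amount

- (Optional) release frequency

Coming soon:

- Whether or not a contract is transferable by the creator/recipient

- Whether or not a contract is cancelable by the creator/recipient

- Subject/memo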

Reference documentation

https://github.com/solana-labs/solana-program-library/blob/master/docs/src/token.mdx