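The EOS block data synced into MongoDB is very large and queries against it are slow. The machine is also low-spec, and running nodeos while it writes into MongoDB on the same box was more than it could handle. So I deployed a MongoDB cluster and configured sharding.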

1. Install MongoDB

I prepared three cloud hosts, each with 1 TB of storage, all on the same internal network. Their IPs and roles:

| 172.31.32.31 | 172.31.32.29 | 172.31.32.30 |
| --- | --- | --- |
| mongos | mongos | mongos |
| config server | config server | config server |
| shard server1 primary | shard server1 secondary | shard server1 arbiter |
| shard server2 arbiter | shard server2 primary | shard server2 secondary |
| shard server3 secondary | shard server3 arbiter | shard server3 primary |

Port allocation:

| Process | Port |
| --- | --- |
| mongos | 20000 |
| config | 21000 |
| shard1 | 27001 |
| shard2 | 27002 |
| shard3 | 27003 |
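The shard rows in the topology table follow a simple rotation: each machine is the intended primary of one shard, a secondary of another and the arbiter of the third. The POSIX shell sketch below encodes that pattern together with the port table. The function names and the host order in `HOSTS` are assumptions for illustration, and "primary" only means the intended primary, since the actual primary is decided by election.

```shell
#!/bin/sh
# Hosts in the column order of the topology table (assumed order).
HOSTS="172.31.32.31 172.31.32.29 172.31.32.30"

# shard_port SHARD -> 27001, 27002 or 27003, as in the port table.
shard_port() {
  echo $((27000 + $1))
}

# shard_role SHARD HOST_INDEX -> primary | secondary | arbiter
# Shard N shifts the pattern (primary, secondary, arbiter) N-1 columns right.
# "primary" is only the intended primary; elections pick the real one.
shard_role() {
  case $(( ($2 - ($1 - 1) + 3) % 3 )) in
    0) echo primary ;;
    1) echo secondary ;;
    2) echo arbiter ;;
  esac
}

# print_topology: one line per shard member, e.g. "shard1 172.31.32.31:27001 primary"
print_topology() {
  local s i h
  for s in 1 2 3; do
    i=0
    for h in $HOSTS; do
      echo "shard$s $h:$(shard_port "$s") $(shard_role "$s" "$i")"
      i=$((i + 1))
    done
  done
}
```

Running `print_topology` lists every shard member with its port and role, which can be checked against the table before any config file is written.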

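On each machine, create six directories: conf, mongos, config, shard1, shard2 and shard3. Because mongos stores no data, it only needs a log directory. /mnt/data is the 1 TB disk mounted on each machine.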

```shell
mkdir -p /mnt/data/mongodb/conf \
  /mnt/data/mongodb/mongos/log \
  /mnt/data/mongodb/config/data \
  /mnt/data/mongodb/config/log \
  /mnt/data/mongodb/shard1/data \
  /mnt/data/mongodb/shard1/log \
  /mnt/data/mongodb/shard2/data \
  /mnt/data/mongodb/shard2/log \
  /mnt/data/mongodb/shard3/data \
  /mnt/data/mongodb/shard3/log
```
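The same layout can be created with a loop instead of listing every path. A minimal sketch assuming POSIX `sh`; `make_mongo_dirs` is a hypothetical helper and its argument defaults to the article's `/mnt/data/mongodb`:

```shell
#!/bin/sh
# make_mongo_dirs [BASE]: create the per-machine directory layout.
# BASE defaults to /mnt/data/mongodb; pass another path to try it out.
make_mongo_dirs() {
  local base="${1:-/mnt/data/mongodb}" role
  # conf/ holds the .conf files; mongos stores no data, so only a log dir.
  mkdir -p "$base/conf" "$base/mongos/log" || return 1
  # The config server and each shard member need data and log dirs.
  for role in config shard1 shard2 shard3; do
    mkdir -p "$base/$role/data" "$base/$role/log" || return 1
  done
}
```

Run `make_mongo_dirs` on each machine, or `make_mongo_dirs /tmp/mongotest` to try it somewhere harmless first.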

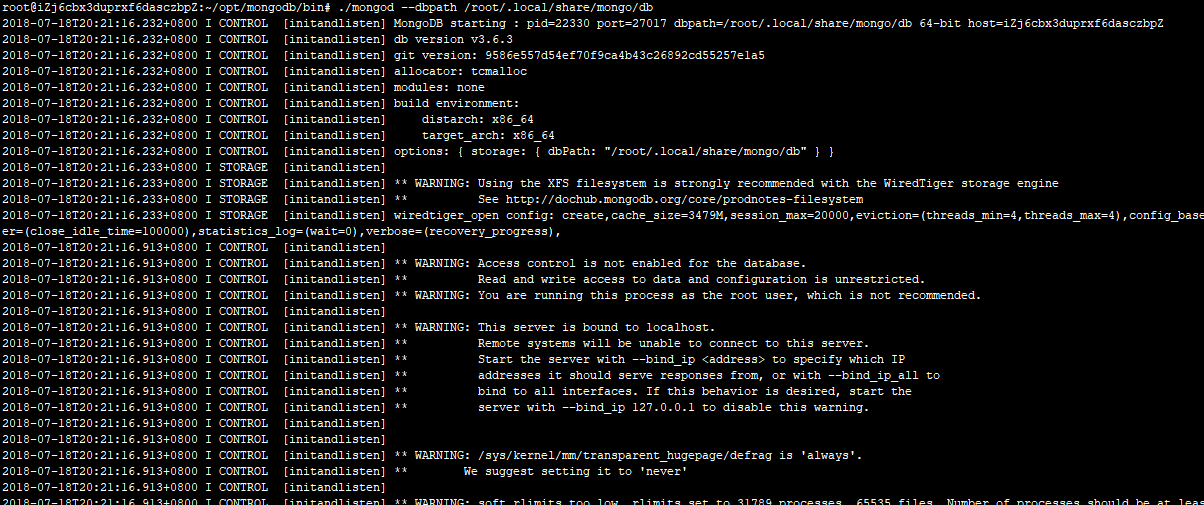

Install mongodb-server:

```shell
sudo apt install mongodb-server
```

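Configure the environment variables. This is only needed if MongoDB was unpacked from a tarball into `~/opt/mongodb`; the apt package already puts `mongod`, `mongos` and `mongo` on the default PATH.

Add the following to `/etc/profile` (`vi /etc/profile`):

```shell
# MongoDB environment variables
export MONGODB_HOME=~/opt/mongodb
export PATH=$MONGODB_HOME/bin:$PATH
```

Apply the change immediately:

```shell
source /etc/profile
```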

2. Config servers

Since MongoDB 3.4, the config servers must also be deployed as a replica set; otherwise the cluster cannot be built.

(On all three machines) create the config file `/mnt/data/mongodb/conf/config.conf`:

```ini
## config file contents
pidfilepath = /mnt/data/mongodb/config/log/configsrv.pid
dbpath = /mnt/data/mongodb/config/data
logpath = /mnt/data/mongodb/config/log/configsrv.log
logappend = true
bind_ip = 0.0.0.0
port = 21000
fork = true
# declare this is a config server of a cluster
configsvr = true
# replica set name
replSet = configs
# maximum number of connections
maxConns = 20000
```
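The config server file and the three shard files in section 3 differ only in name, port, role flag and replica set name, so they can be generated. A sketch with a hypothetical `write_mongod_conf` helper that writes the same legacy `key = value` format used in this article. The naming rules (replica set `configs` and file prefix `configsrv` for the config server, the shard's own name otherwise) are copied from these files; the comment lines are omitted.

```shell
#!/bin/sh
# write_mongod_conf NAME PORT ROLE [BASE]
#   NAME: config, shard1, shard2 or shard3
#   ROLE: configsvr or shardsvr
# Writes BASE/conf/NAME.conf in the same "key = value" format as above.
write_mongod_conf() {
  local name="$1" port="$2" role="$3" base="${4:-/mnt/data/mongodb}"
  local rs="$name" prefix="$name"
  # The config server's replica set and log/pid names differ from its dir name.
  if [ "$name" = config ]; then
    rs=configs
    prefix=configsrv
  fi
  mkdir -p "$base/conf" || return 1
  cat > "$base/conf/$name.conf" <<EOF
pidfilepath = $base/$name/log/$prefix.pid
dbpath = $base/$name/data
logpath = $base/$name/log/$prefix.log
logappend = true
bind_ip = 0.0.0.0
port = $port
fork = true
$role = true
replSet = $rs
maxConns = 20000
EOF
}
```

`write_mongod_conf config 21000 configsvr` produces the file above, and `write_mongod_conf shard1 27001 shardsvr` (likewise shard2/27002 and shard3/27003) produces the shard files.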

Start the config server on all three machines:

```shell
mongod -f /mnt/data/mongodb/conf/config.conf
```

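Log in to any one of the config servers and initialize the config replica set.

Connect to MongoDB:

```shell
mongo --port 21000
```

Define the `config` variable:

```javascript
config = {
  _id : "configs",
  members : [
    {_id : 0, host : "172.31.32.31:21000" },
    {_id : 1, host : "172.31.32.29:21000" },
    {_id : 2, host : "172.31.32.30:21000" }
  ]
}
```

Initialize the replica set:

```javascript
rs.initiate(config)
```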

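Here `_id : "configs"` must match the replica set name in the config file (the `replSet` option above, called `replication.replSetName` in the YAML config format), and each `host` in `members` is the IP and port of one node.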
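To keep `_id` and the config file's replica set name from drifting apart, the document can be built from the config file itself. A sketch with a hypothetical `rs_config_js` helper (not a MongoDB tool) that reads the `replSet = ...` line and prints a document suitable for `rs.initiate()`:

```shell
#!/bin/sh
# rs_config_js CONF_FILE PORT HOST...
# Prints a replica set config document for rs.initiate(). The _id is read
# from the "replSet = ..." line of CONF_FILE, so the two always match.
rs_config_js() {
  local conf="$1" port="$2" rs members="" i=0 host
  shift 2
  rs=$(sed -n 's/^replSet[[:space:]]*=[[:space:]]*//p' "$conf")
  if [ -z "$rs" ]; then
    echo "no replSet line in $conf" >&2
    return 1
  fi
  # One member per host, numbered from 0 in argument order.
  for host in "$@"; do
    if [ "$i" -gt 0 ]; then members="$members, "; fi
    members="$members{_id: $i, host: \"$host:$port\"}"
    i=$((i + 1))
  done
  echo "{_id: \"$rs\", members: [$members]}"
}
```

The output can be passed to the legacy `mongo` shell's `--eval` option instead of typing the document interactively, e.g. `mongo --port 21000 --eval "rs.initiate($(rs_config_js /mnt/data/mongodb/conf/config.conf 21000 172.31.32.31 172.31.32.29 172.31.32.30))"`.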

The response:

```
> config = {
... _id : "configs",
... members : [
... {_id : 0, host : "172.31.32.31:21000" },
... {_id : 1, host : "172.31.32.29:21000" },
... {_id : 2, host : "172.31.32.30:21000" }
... ]
... }
{
  "_id" : "configs",
  "members" : [
    {
      "_id" : 0,
      "host" : "172.31.32.31:21000"
    },
    {
      "_id" : 1,
      "host" : "172.31.32.29:21000"
    },
    {
      "_id" : 2,
      "host" : "172.31.32.30:21000"
    }
  ]
}
> rs.initiate(config)
{
  "ok" : 1,
  "operationTime" : Timestamp(1535116186, 1),
  "$gleStats" : {
    "lastOpTime" : Timestamp(1535116186, 1),
    "electionId" : ObjectId("000000000000000000000000")
  },
  "$clusterTime" : {
    "clusterTime" : Timestamp(1535116186, 1),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  }
}
```

The shell prompt now changes from `>` to `configs:SECONDARY>`; once this node wins the election it shows `configs:PRIMARY>`.

Check the status:

```javascript
rs.status()
```

Output:

```
configs:SECONDARY> rs.status()
{
  "set" : "configs",
  "date" : ISODate("2018-08-24T13:10:23.181Z"),
  "myState" : 1,
  "term" : NumberLong(1),
  "configsvr" : true,
  "heartbeatIntervalMillis" : NumberLong(2000),
  "optimes" : {
    "lastCommittedOpTime" : {
      "ts" : Timestamp(1535116209, 1),
      "t" : NumberLong(1)
    },
    "readConcernMajorityOpTime" : {
      "ts" : Timestamp(1535116209, 1),
      "t" : NumberLong(1)
    },
    "appliedOpTime" : {
      "ts" : Timestamp(1535116209, 1),
      "t" : NumberLong(1)
    },
    "durableOpTime" : {
      "ts" : Timestamp(1535116209, 1),
      "t" : NumberLong(1)
    }
  },
  "members" : [
    {
      "_id" : 0,
      "name" : "172.31.32.31:21000",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 37,
      "optime" : {
        "ts" : Timestamp(1535116209, 1),
        "t" : NumberLong(1)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1535116209, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2018-08-24T13:10:09Z"),
      "optimeDurableDate" : ISODate("2018-08-24T13:10:09Z"),
      "lastHeartbeat" : ISODate("2018-08-24T13:10:22.372Z"),
      "lastHeartbeatRecv" : ISODate("2018-08-24T13:10:21.182Z"),
      "pingMs" : NumberLong(0),
      "syncingTo" : "172.31.32.30:21000",
      "configVersion" : 1
    },
    {
      "_id" : 1,
      "name" : "172.31.32.29:21000",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 37,
      "optime" : {
        "ts" : Timestamp(1535116209, 1),
        "t" : NumberLong(1)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1535116209, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2018-08-24T13:10:09Z"),
      "optimeDurableDate" : ISODate("2018-08-24T13:10:09Z"),
      "lastHeartbeat" : ISODate("2018-08-24T13:10:22.372Z"),
      "lastHeartbeatRecv" : ISODate("2018-08-24T13:10:22.128Z"),
      "pingMs" : NumberLong(0),
      "syncingTo" : "172.31.32.30:21000",
      "configVersion" : 1
    },
    {
      "_id" : 2,
      "name" : "172.31.32.30:21000",
      "health" : 1,
      "state" : 1,
      "stateStr" : "PRIMARY",
      "uptime" : 195,
      "optime" : {
        "ts" : Timestamp(1535116209, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2018-08-24T13:10:09Z"),
      "infoMessage" : "could not find member to sync from",
      "electionTime" : Timestamp(1535116196, 1),
      "electionDate" : ISODate("2018-08-24T13:09:56Z"),
      "configVersion" : 1,
      "self" : true
    }
  ],
  "ok" : 1,
  "operationTime" : Timestamp(1535116209, 1),
  "$gleStats" : {
    "lastOpTime" : Timestamp(1535116186, 1),
    "electionId" : ObjectId("7fffffff0000000000000001")
  },
  "$clusterTime" : {
    "clusterTime" : Timestamp(1535116209, 1),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  }
}
configs:PRIMARY>
```

3. Configure the shard replica sets

3.1 Set up the first shard replica set

(On all three machines) configure the first shard replica set.

Config file `/mnt/data/mongodb/conf/shard1.conf`:

```ini
pidfilepath = /mnt/data/mongodb/shard1/log/shard1.pid
dbpath = /mnt/data/mongodb/shard1/data
logpath = /mnt/data/mongodb/shard1/log/shard1.log
logappend = true
bind_ip = 0.0.0.0
port = 27001
fork = true
replSet = shard1
# declare this is a shard server of a cluster
shardsvr = true
maxConns = 20000
```

Start shard1 on all three machines:

```shell
mongod -f /mnt/data/mongodb/conf/shard1.conf
```

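Log in to any server except 172.31.32.30, which will be the shard1 arbiter, and initialize the replica set.

Connect to MongoDB:

```shell
mongo --port 27001
```

Switch to the admin database:

```javascript
use admin
```

Define the replica set configuration:

```javascript
config = {
  _id : "shard1",
  members : [
    {_id : 0, host : "172.31.32.31:27001" },
    {_id : 1, host : "172.31.32.29:27001" },
    {_id : 2, host : "172.31.32.30:27001" , arbiterOnly: true }
  ]
}
```

Initialize the replica set:

```javascript
rs.initiate(config)
```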
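The three shard configurations differ only in port and in which member is the arbiter. A sketch, assuming the host order of the topology table, that prints each shard's `rs.initiate()` document and a host to run it on. The article avoids the arbiter when initiating; as far as I know `rs.initiate()` has to run on a member that can become primary, so `initiate_host` returns the intended primary. All names here are hypothetical.

```shell
#!/bin/sh
# Hosts in the column order of the topology table (assumed order).
HOSTS="172.31.32.31 172.31.32.29 172.31.32.30"

# nth_host INDEX -> the INDEX-th (0-based) entry of $HOSTS
nth_host() {
  echo "$HOSTS" | cut -d' ' -f"$(($1 + 1))"
}

# shard_rs_config SHARD -> rs.initiate() document for shard SHARD (1..3).
# The arbiter is host index (SHARD + 1) % 3: .30 for shard1, .31 for shard2,
# .29 for shard3, matching the table.
shard_rs_config() {
  local shard="$1" port=$((27000 + $1)) arb=$(( ($1 + 1) % 3 ))
  local i=0 members="" host
  for host in $HOSTS; do
    if [ "$i" -gt 0 ]; then members="$members, "; fi
    if [ "$i" -eq "$arb" ]; then
      members="$members{_id: $i, host: \"$host:$port\", arbiterOnly: true}"
    else
      members="$members{_id: $i, host: \"$host:$port\"}"
    fi
    i=$((i + 1))
  done
  echo "{_id: \"shard$shard\", members: [$members]}"
}

# initiate_host SHARD -> intended primary (a data-bearing member, never the arbiter)
initiate_host() {
  nth_host $(($1 - 1))
}
```

For example, shard2 could be initiated with `mongo --host "$(initiate_host 2)" --port 27002 --eval "rs.initiate($(shard_rs_config 2))"`.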

The response:

```
> use admin
switched to db admin
> config = {
... _id : "shard1",
... members : [
... {_id : 0, host : "172.31.32.31:27001" },
... {_id : 1, host : "172.31.32.29:27001" },
... {_id : 2, host : "172.31.32.30:27001" , arbiterOnly: true }
... ]
... }
{
  "_id" : "shard1",
  "members" : [
    {
      "_id" : 0,
      "host" : "172.31.32.31:27001"
    },
    {
      "_id" : 1,
      "host" : "172.31.32.29:27001"
    },
    {
      "_id" : 2,
      "host" : "172.31.32.30:27001",
      "arbiterOnly" : true
    }
  ]
}
> rs.initiate(config)
{ "ok" : 1 }
```

The shell prompt now changes from `>` to `shard1:SECONDARY>`.

Check the status:

```javascript
rs.status()
```

The response:

```
shard1:SECONDARY> rs.status()
{
  "set" : "shard1",
  "date" : ISODate("2018-08-24T13:24:09.626Z"),
  "myState" : 1,
  "term" : NumberLong(1),
  "heartbeatIntervalMillis" : NumberLong(2000),
  "optimes" : {
    "lastCommittedOpTime" : {
      "ts" : Timestamp(1535117039, 2),
      "t" : NumberLong(1)
    },
    "readConcernMajorityOpTime" : {
      "ts" : Timestamp(1535117039, 2),
      "t" : NumberLong(1)
    },
    "appliedOpTime" : {
      "ts" : Timestamp(1535117039, 2),
      "t" : NumberLong(1)
    },
    "durableOpTime" : {
      "ts" : Timestamp(1535117039, 2),
      "t" : NumberLong(1)
    }
  },
  "members" : [
    {
      "_id" : 0,
      "name" : "172.31.32.31:27001",
      "health" : 1,
      "state" : 1,
      "stateStr" : "PRIMARY",
      "uptime" : 396,
      "optime" : {
        "ts" : Timestamp(1535117039, 2),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2018-08-24T13:23:59Z"),
      "infoMessage" : "could not find member to sync from",
      "electionTime" : Timestamp(1535117038, 1),
      "electionDate" : ISODate("2018-08-24T13:23:58Z"),
      "configVersion" : 1,
      "self" : true
    },
    {
      "_id" : 1,
      "name" : "172.31.32.29:27001",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 21,
      "optime" : {
        "ts" : Timestamp(1535117039, 2),
        "t" : NumberLong(1)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1535117039, 2),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2018-08-24T13:23:59Z"),
      "optimeDurableDate" : ISODate("2018-08-24T13:23:59Z"),
      "lastHeartbeat" : ISODate("2018-08-24T13:24:08.467Z"),
      "lastHeartbeatRecv" : ISODate("2018-08-24T13:24:04.801Z"),
      "pingMs" : NumberLong(0),
      "syncingTo" : "172.31.32.31:27001",
      "configVersion" : 1
    },
    {
      "_id" : 2,
      "name" : "172.31.32.30:27001",
      "health" : 1,
      "state" : 7,
      "stateStr" : "ARBITER",
      "uptime" : 21,
      "lastHeartbeat" : ISODate("2018-08-24T13:24:08.467Z"),
      "lastHeartbeatRecv" : ISODate("2018-08-24T13:24:04.781Z"),
      "pingMs" : NumberLong(0),
      "configVersion" : 1
    }
  ],
  "ok" : 1
}
```

3.2 Set up the second shard replica set

(On all three machines) configure the second shard replica set.

Config file `/mnt/data/mongodb/conf/shard2.conf`:

```ini
pidfilepath = /mnt/data/mongodb/shard2/log/shard2.pid
dbpath = /mnt/data/mongodb/shard2/data
logpath = /mnt/data/mongodb/shard2/log/shard2.log
logappend = true
bind_ip = 0.0.0.0
port = 27002
fork = true
replSet = shard2
# declare this is a shard server of a cluster
shardsvr = true
maxConns = 20000
```

Start shard2 on all three machines:

```shell
mongod -f /mnt/data/mongodb/conf/shard2.conf
```

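Log in to any server except 172.31.32.31, which will be the shard2 arbiter, and connect to MongoDB:

```shell
mongo --port 27002
```

Switch to the admin database:

```javascript
use admin
```

Define the replica set configuration:

```javascript
config = {
  _id : "shard2",
  members : [
    {_id : 0, host : "172.31.32.31:27002" , arbiterOnly: true },
    {_id : 1, host : "172.31.32.29:27002" },
    {_id : 2, host : "172.31.32.30:27002" }
  ]
}
```

Initialize the replica set:

```javascript
rs.initiate(config)
```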

The response:

```
> use admin
switched to db admin
> config = {
... _id : "shard2",
... members : [
... {_id : 0, host : "172.31.32.31:27002" , arbiterOnly: true },
... {_id : 1, host : "172.31.32.29:27002" },
... {_id : 2, host : "172.31.32.30:27002" }
... ]
... }
{
  "_id" : "shard2",
  "members" : [
    {
      "_id" : 0,
      "host" : "172.31.32.31:27002",
      "arbiterOnly" : true
    },
    {
      "_id" : 1,
      "host" : "172.31.32.29:27002"
    },
    {
      "_id" : 2,
      "host" : "172.31.32.30:27002"
    }
  ]
}
> rs.initiate(config)
{ "ok" : 1 }
```

Check the status:

```javascript
rs.status()
```

The returned status:

```
shard2:SECONDARY> rs.status()
{
  "set" : "shard2",
  "date" : ISODate("2018-08-24T13:34:17.459Z"),
  "myState" : 1,
  "term" : NumberLong(1),
  "heartbeatIntervalMillis" : NumberLong(2000),
  "optimes" : {
    "lastCommittedOpTime" : {
      "ts" : Timestamp(1535117651, 1),
      "t" : NumberLong(1)
    },
    "readConcernMajorityOpTime" : {
      "ts" : Timestamp(1535117651, 1),
      "t" : NumberLong(1)
    },
    "appliedOpTime" : {
      "ts" : Timestamp(1535117651, 1),
      "t" : NumberLong(1)
    },
    "durableOpTime" : {
      "ts" : Timestamp(1535117651, 1),
      "t" : NumberLong(1)
    }
  },
  "members" : [
    {
      "_id" : 0,
      "name" : "172.31.32.31:27002",
      "health" : 1,
      "state" : 7,
      "stateStr" : "ARBITER",
      "uptime" : 39,
      "lastHeartbeat" : ISODate("2018-08-24T13:34:15.701Z"),
      "lastHeartbeatRecv" : ISODate("2018-08-24T13:34:15.286Z"),
      "pingMs" : NumberLong(0),
      "configVersion" : 1
    },
    {
      "_id" : 1,
      "name" : "172.31.32.29:27002",
      "health" : 1,
      "state" : 1,
      "stateStr" : "PRIMARY",
      "uptime" : 153,
      "optime" : {
        "ts" : Timestamp(1535117651, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2018-08-24T13:34:11Z"),
      "infoMessage" : "could not find member to sync from",
      "electionTime" : Timestamp(1535117629, 1),
      "electionDate" : ISODate("2018-08-24T13:33:49Z"),
      "configVersion" : 1,
      "self" : true
    },
    {
      "_id" : 2,
      "name" : "172.31.32.30:27002",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 39,
      "optime" : {
        "ts" : Timestamp(1535117651, 1),
        "t" : NumberLong(1)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1535117651, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2018-08-24T13:34:11Z"),
      "optimeDurableDate" : ISODate("2018-08-24T13:34:11Z"),
      "lastHeartbeat" : ISODate("2018-08-24T13:34:15.701Z"),
      "lastHeartbeatRecv" : ISODate("2018-08-24T13:34:16.331Z"),
      "pingMs" : NumberLong(0),
      "syncingTo" : "172.31.32.29:27002",
      "configVersion" : 1
    }
  ],
  "ok" : 1
}
```

3.3 Set up the third shard replica set

(On all three machines) configure the third shard replica set.

Config file `/mnt/data/mongodb/conf/shard3.conf`:

```ini
pidfilepath = /mnt/data/mongodb/shard3/log/shard3.pid
dbpath = /mnt/data/mongodb/shard3/data
logpath = /mnt/data/mongodb/shard3/log/shard3.log
logappend = true
bind_ip = 0.0.0.0
port = 27003
fork = true
replSet = shard3
# declare this is a shard server of a cluster
shardsvr = true
maxConns = 20000
```

Start shard3 on all three machines:

```shell
mongod -f /mnt/data/mongodb/conf/shard3.conf
```

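Log in to any server except 172.31.32.29, which will be the shard3 arbiter, and initialize the replica set:

```shell
mongo --port 27003
```

Switch to the admin database:

```javascript
use admin
```

Define the replica set configuration:

```javascript
config = {
  _id : "shard3",
  members : [
    {_id : 0, host : "172.31.32.31:27003" },
    {_id : 1, host : "172.31.32.29:27003" , arbiterOnly: true},
    {_id : 2, host : "172.31.32.30:27003" }
  ]
}
```

Initialize the replica set:

```javascript
rs.initiate(config)
```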

The response:

```
> use admin
switched to db admin
> config = {
... _id : "shard3",
... members : [
... {_id : 0, host : "172.31.32.31:27003" },
... {_id : 1, host : "172.31.32.29:27003" , arbiterOnly: true},
... {_id : 2, host : "172.31.32.30:27003" }
... ]
... }
{
  "_id" : "shard3",
  "members" : [
    {
      "_id" : 0,
      "host" : "172.31.32.31:27003"
    },
    {
      "_id" : 1,
      "host" : "172.31.32.29:27003",
      "arbiterOnly" : true
    },
    {
      "_id" : 2,
      "host" : "172.31.32.30:27003"
    }
  ]
}
> rs.initiate(config)
{ "ok" : 1 }
```

Check the status:

```javascript
rs.status()
```

The response:

```
shard3:SECONDARY> rs.status()
{
  "set" : "shard3",
  "date" : ISODate("2018-08-24T13:45:19.572Z"),
  "myState" : 1,
  "term" : NumberLong(1),
  "heartbeatIntervalMillis" : NumberLong(2000),
  "optimes" : {
    "lastCommittedOpTime" : {
      "ts" : Timestamp(1535118313, 1),
      "t" : NumberLong(1)
    },
    "readConcernMajorityOpTime" : {
      "ts" : Timestamp(1535118313, 1),
      "t" : NumberLong(1)
    },
    "appliedOpTime" : {
      "ts" : Timestamp(1535118313, 1),
      "t" : NumberLong(1)
    },
    "durableOpTime" : {
      "ts" : Timestamp(1535118313, 1),
      "t" : NumberLong(1)
    }
  },
  "members" : [
    {
      "_id" : 0,
      "name" : "172.31.32.31:27003",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 97,
      "optime" : {
        "ts" : Timestamp(1535118313, 1),
        "t" : NumberLong(1)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1535118313, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2018-08-24T13:45:13Z"),
      "optimeDurableDate" : ISODate("2018-08-24T13:45:13Z"),
      "lastHeartbeat" : ISODate("2018-08-24T13:45:18.293Z"),
      "lastHeartbeatRecv" : ISODate("2018-08-24T13:45:18.675Z"),
      "pingMs" : NumberLong(0),
      "syncingTo" : "172.31.32.30:27003",
      "configVersion" : 1
    },
    {
      "_id" : 1,
      "name" : "172.31.32.29:27003",
      "health" : 1,
      "state" : 7,
      "stateStr" : "ARBITER",
      "uptime" : 97,
      "lastHeartbeat" : ISODate("2018-08-24T13:45:18.293Z"),
      "lastHeartbeatRecv" : ISODate("2018-08-24T13:45:18.602Z"),
      "pingMs" : NumberLong(0),
      "configVersion" : 1
    },
    {
      "_id" : 2,
      "name" : "172.31.32.30:27003",
      "health" : 1,
      "state" : 1,
      "stateStr" : "PRIMARY",
      "uptime" : 126,
      "optime" : {
        "ts" : Timestamp(1535118313, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2018-08-24T13:45:13Z"),
      "infoMessage" : "could not find member to sync from",
      "electionTime" : Timestamp(1535118232, 1),
      "electionDate" : ISODate("2018-08-24T13:43:52Z"),
      "configVersion" : 1,
      "self" : true
    }
  ],
  "ok" : 1
}
```

3.4 Configure the mongos routers

(On all three machines) Start the config servers and shard servers first, then start the mongos routers.

Create the config file `/mnt/data/mongodb/conf/mongos.conf`:

```ini
pidfilepath = /mnt/data/mongodb/mongos/log/mongos.pid
logpath = /mnt/data/mongodb/mongos/log/mongos.log
logappend = true
bind_ip = 0.0.0.0
port = 20000
fork = true
# config servers to connect to: <config replica set name>/<host:port list>
configdb = configs/172.31.32.31:21000,172.31.32.29:21000,172.31.32.30:21000
maxConns = 20000
```
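The `configdb` value is simply the config replica set name followed by its members. A sketch that assembles it from the name, port and hosts used above and writes the whole `mongos.conf`; `CONFIG_RS`, `CONFIG_PORT`, `CONFIG_HOSTS` and both functions are hypothetical names:

```shell
#!/bin/sh
# Config replica set name, port and hosts, taken from the tables above.
CONFIG_RS=configs
CONFIG_PORT=21000
CONFIG_HOSTS="172.31.32.31 172.31.32.29 172.31.32.30"

# configdb_value -> "configs/host1:21000,host2:21000,host3:21000"
configdb_value() {
  local list="" h
  for h in $CONFIG_HOSTS; do
    # Prepend "$list," only once list is non-empty, so no leading comma.
    list="${list:+$list,}$h:$CONFIG_PORT"
  done
  echo "$CONFIG_RS/$list"
}

# write_mongos_conf [BASE] -> writes BASE/conf/mongos.conf
write_mongos_conf() {
  local base="${1:-/mnt/data/mongodb}"
  mkdir -p "$base/conf" || return 1
  cat > "$base/conf/mongos.conf" <<EOF
pidfilepath = $base/mongos/log/mongos.pid
logpath = $base/mongos/log/mongos.log
logappend = true
bind_ip = 0.0.0.0
port = 20000
fork = true
configdb = $(configdb_value)
maxConns = 20000
EOF
}
```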

Start mongos on all three machines:

```shell
mongos -f /mnt/data/mongodb/conf/mongos.conf
```
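The startup order (config server, then shard members, then mongos) can be captured in one helper per machine. A sketch assuming the file names used in this article; `run`, `start_cluster_node` and the `DRY_RUN` variable are hypothetical. Because `fork = true` is set, each `mongod` call should return only after its daemon has started, so a failure stops the sequence. mongos also needs the config servers on the other machines, so run this after all three config servers are reachable.

```shell
#!/bin/sh
# run CMD...: execute the command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# start_cluster_node [BASE]: start this machine's processes in order and
# stop at the first one that fails to start.
start_cluster_node() {
  local base="${1:-/mnt/data/mongodb}" name
  for name in config shard1 shard2 shard3; do
    run mongod -f "$base/conf/$name.conf" || return 1
  done
  run mongos -f "$base/conf/mongos.conf"
}
```

`DRY_RUN=1 start_cluster_node` prints the five commands without starting anything, which is a quick way to check the paths.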

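4. Register the shards with the routers

The config servers, routers and shard servers are now running, but mongos does not yet know about the shards, so an application connecting to it cannot use sharding. The shard replica sets have to be added through mongos first.

Log in to any mongos:

```shell
mongo --port 20000
```

Switch to the admin database:

```javascript
use admin
```

Add the shard replica sets to the routers:

```javascript
sh.addShard("shard1/172.31.32.31:27001,172.31.32.29:27001,172.31.32.30:27001");
sh.addShard("shard2/172.31.32.31:27002,172.31.32.29:27002,172.31.32.30:27002");
sh.addShard("shard3/172.31.32.31:27003,172.31.32.29:27003,172.31.32.30:27003");
```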
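The three seed strings follow directly from the host list and the shard ports. A sketch that prints the same `sh.addShard()` calls (the host order and function names are assumptions); the output could be piped into the legacy shell, e.g. `all_add_shards | mongo --port 20000 admin`.

```shell
#!/bin/sh
# Hosts in the column order of the topology table (assumed order).
HOSTS="172.31.32.31 172.31.32.29 172.31.32.30"

# add_shard_js SHARD -> sh.addShard("shardN/host:port,...") listing all members
add_shard_js() {
  local port=$((27000 + $1)) list="" h
  for h in $HOSTS; do
    list="${list:+$list,}$h:$port"
  done
  printf 'sh.addShard("shard%s/%s");\n' "$1" "$list"
}

# all_add_shards -> the commands for shard1..shard3, one per line
all_add_shards() {
  local s
  for s in 1 2 3; do
    add_shard_js "$s"
  done
}
```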

Check the cluster status:

```javascript
sh.status()
```

The response:

```
mongos> sh.addShard("shard1/172.31.32.31:27001,172.31.32.29:27001,172.31.32.30:27001");
{
  "shardAdded" : "shard1",
  "ok" : 1,
  "$clusterTime" : {
    "clusterTime" : Timestamp(1535118917, 6),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  },
  "operationTime" : Timestamp(1535118917, 6)
}
mongos> sh.addShard("shard2/172.31.32.31:27002,172.31.32.29:27002,172.31.32.30:27002");
{
  "shardAdded" : "shard2",
  "ok" : 1,
  "$clusterTime" : {
    "clusterTime" : Timestamp(1535118917, 10),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  },
  "operationTime" : Timestamp(1535118917, 10)
}
mongos> sh.addShard("shard3/172.31.32.31:27003,172.31.32.29:27003,172.31.32.30:27003");
{
  "shardAdded" : "shard3",
  "ok" : 1,
  "$clusterTime" : {
    "clusterTime" : Timestamp(1535118918, 5),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  },
  "operationTime" : Timestamp(1535118918, 5)
}
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("5b8003a67629bf9778831c77")
  }
  shards:
    { "_id" : "shard1", "host" : "shard1/172.31.32.29:27001,172.31.32.31:27001", "state" : 1 }
    { "_id" : "shard2", "host" : "shard2/172.31.32.29:27002,172.31.32.30:27002", "state" : 1 }
    { "_id" : "shard3", "host" : "shard3/172.31.32.30:27003,172.31.32.31:27003", "state" : 1 }
  active mongoses:
    "3.6.3" : 3
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      No recent migrations
  databases:
    { "_id" : "config", "primary" : "config", "partitioned" : true }
```

Each shard's `host` list shows only its two data-bearing members; the arbiter holds no data and is not listed.

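5. Enable sharding for the collection

The config, router, shard and replica set services are now all linked together, but the goal is for inserted data to be split across the shards automatically. Connect to mongos and enable sharding for a specific database and collection.

Log in to any mongos:

```shell
mongo --port 20000
```

Switch to the admin database:

```javascript
use admin
```

Enable sharding for the `eos` database:

```javascript
db.runCommand( { enablesharding : "eos" } );
```

Specify the collection to shard and its shard key; here `eos.blocks` is sharded on a hashed `_id`:

```javascript
db.runCommand( { shardcollection : "eos.blocks", key : { "_id" : "hashed" } } );
```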
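The same two steps can be written with the shell helpers `sh.enableSharding()` and `sh.shardCollection()`, which wrap the `enableSharding` and `shardCollection` admin commands. A hashed `_id` key fits here, assuming the plugin lets MongoDB generate default ObjectId `_id` values: those increase monotonically, so a plain range key on `_id` would send every new block to the same chunk, while hashing spreads inserts across the shards. A sketch that generates the script (`shard_collection_js` is a hypothetical name):

```shell
#!/bin/sh
# shard_collection_js DB COLLECTION -> mongo shell script that enables
# sharding on DB and shards DB.COLLECTION on a hashed _id key.
shard_collection_js() {
  printf 'sh.enableSharding("%s");\n' "$1"
  printf 'sh.shardCollection("%s.%s", { _id: "hashed" });\n' "$1" "$2"
}
```

`shard_collection_js eos blocks | mongo --port 20000 admin` would apply it through a local mongos.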

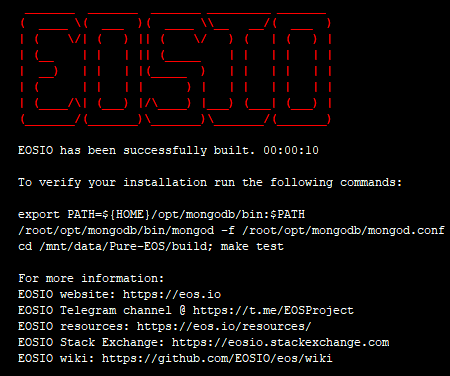

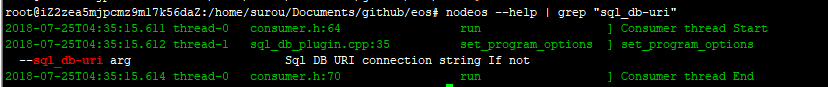

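6. Modify the EOS config.ini

On one of the three machines, run nodeos and sync the block data into MongoDB through the local mongos (port 20000):

```ini
plugin = eosio::mongo_db_plugin
mongodb-uri = mongodb://localhost:20000/eos
```

Replay the chain to reset the data:

```shell
nodeos --data-dir /mnt/data/data --hard-replay-blockchain --mongodb-wipe
```

Add `--mongodb-wipe` if the existing MongoDB data needs to be cleared.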

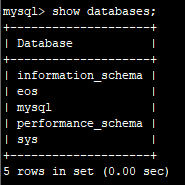

Then check the databases through any mongos:

```shell
mongo --port 20000
```

```
mongos> show databases;
admin   0.000GB
config  0.001GB
eos     0.071GB
```

The data is now stored in the sharded cluster as intended.
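`show databases` only confirms that the `eos` database exists. To see how `eos.blocks` is actually spread over the shards, the legacy shell's `db.collection.getShardDistribution()` prints per-shard data size, document count and chunk count. A small sketch; the function names are hypothetical and port 20000 is the mongos port from above:

```shell
#!/bin/sh
# distribution_js DB COLLECTION -> script printing per-shard data size,
# document count and chunk count for DB.COLLECTION.
distribution_js() {
  printf 'db.getSiblingDB("%s").getCollection("%s").getShardDistribution();\n' "$1" "$2"
}

# check_distribution DB COLLECTION [PORT]: run the script through a mongos.
check_distribution() {
  distribution_js "$1" "$2" | mongo --quiet --port "${3:-20000}"
}
```

`check_distribution eos blocks` should list shard1, shard2 and shard3 with roughly similar document counts once the balancer has settled.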

Reference: segmentfault