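pgbench is a tool for benchmarking PostgreSQL performance. It can also be used to test PostgreSQL connection stability by opening many concurrent connections and running queries against them continuously.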

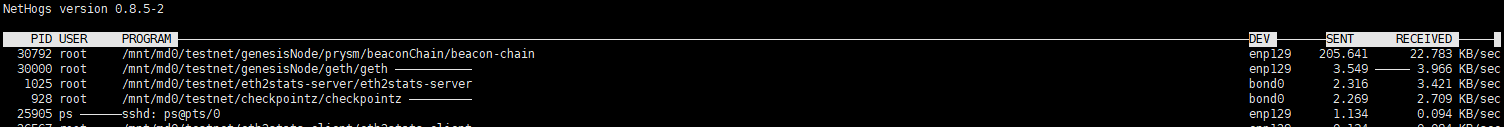

Checking local service network traffic on Linux

Install

sudo apt install nethogs

Test it by running sudo nethogs, which shows network bandwidth per process.

Determining block irreversibility (finality) on the Polygon zkEVM chain

After it is produced, a block passes through two further stages, each of which can be checked over RPC:

eth_blockNumber -> zkevm_isBlockVirtualized -> zkevm_isBlockConsolidated

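zkevm_isBlockVirtualized: reaching this stage means the block's data has been submitted to L1. From this point on, an inconsistency is extremely unlikely and can only arise from abnormal events such as a failed version upgrade.

zkevm_isBlockConsolidated: the ZK proof for the block has been generated, so its data can no longer become inconsistent.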
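The stage model above can be captured as a small classification step. This is a minimal sketch, assuming the caller already has the boolean results of the two RPC calls; the `Finality` type, its three labels, and `classify` are hypothetical names chosen for illustration.

```go
package main

import "fmt"

// Finality is a hypothetical label for how safe it is to treat a zkEVM block as irreversible.
type Finality int

const (
	// Trusted: the block is known to the node (eth_blockNumber) but its data is not yet on L1.
	Trusted Finality = iota
	// Virtualized: block data has been submitted to L1; inconsistency is very unlikely.
	Virtualized
	// Consolidated: the ZK proof has been generated; the block is final.
	Consolidated
)

func (f Finality) String() string {
	switch f {
	case Virtualized:
		return "virtualized"
	case Consolidated:
		return "consolidated"
	default:
		return "trusted"
	}
}

// classify maps the results of zkevm_isBlockVirtualized and zkevm_isBlockConsolidated
// to a Finality level. Consolidation is checked first because it is the later stage.
func classify(virtualized, consolidated bool) Finality {
	switch {
	case consolidated:
		return Consolidated
	case virtualized:
		return Virtualized
	default:
		return Trusted
	}
}

func main() {
	fmt.Println(classify(true, false)) // virtualized
	fmt.Println(classify(true, true))  // consolidated
}
```

An application that needs strict irreversibility would wait for `Consolidated`; one that accepts the small residual risk described above could act on `Virtualized`.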

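How long each stage takes depends on the node configuration. In the current test environment:

zkevm_isBlockVirtualized: about 15 s

zkevm_isBlockConsolidated: 10-20 min (when proving compute is sufficient)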

RPC reference

https://www.quicknode.com/docs/polygon-zkevm/zkevm_isBlockVirtualized

https://www.quicknode.com/docs/polygon-zkevm/zkevm_isBlockConsolidated
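The two methods listed above can be polled to decide whether a block is irreversible. This is a minimal Go sketch using only the standard library. It assumes both methods take a single block-number parameter encoded as a hex string and return a JSON boolean (check the QuickNode pages for the exact format). `isBlockIn` and `newFakeNode` are hypothetical names, and the fake node exists only so the example runs offline.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strconv"
	"strings"
)

// rpcRequest is a JSON-RPC 2.0 request envelope.
type rpcRequest struct {
	JSONRPC string        `json:"jsonrpc"`
	Method  string        `json:"method"`
	Params  []interface{} `json:"params"`
	ID      int           `json:"id"`
}

// rpcError is the JSON-RPC 2.0 error object.
type rpcError struct {
	Code    int    `json:"code"`
	Message string `json:"message"`
}

// rpcResponse assumes a boolean "result" field.
type rpcResponse struct {
	Result bool      `json:"result"`
	Error  *rpcError `json:"error"`
}

// isBlockIn calls a zkevm_isBlock* method for blockNum and returns its boolean result.
// Assumption: the block number is sent as a hex quantity string such as "0x1a".
func isBlockIn(url, method string, blockNum uint64) (bool, error) {
	body, err := json.Marshal(rpcRequest{JSONRPC: "2.0", Method: method,
		Params: []interface{}{fmt.Sprintf("0x%x", blockNum)}, ID: 1})
	if err != nil {
		return false, err
	}
	resp, err := http.Post(url, "application/json", bytes.NewReader(body))
	if err != nil {
		return false, err
	}
	defer resp.Body.Close()

	var out rpcResponse
	if err := json.NewDecoder(resp.Body).Decode(&out); err != nil {
		return false, fmt.Errorf("%s: decoding response: %w", method, err)
	}
	if out.Error != nil {
		return false, fmt.Errorf("%s: rpc error %d: %s", method, out.Error.Code, out.Error.Message)
	}
	return out.Result, nil
}

// newFakeNode is a hypothetical local stand-in for a zkEVM node: blocks up to
// virtualizedUpTo are virtualized and blocks up to consolidatedUpTo are consolidated.
func newFakeNode(virtualizedUpTo, consolidatedUpTo uint64) *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		var req rpcRequest
		if err := json.NewDecoder(r.Body).Decode(&req); err != nil || len(req.Params) != 1 {
			http.Error(w, "bad request", http.StatusBadRequest)
			return
		}
		s, _ := req.Params[0].(string)
		n, err := strconv.ParseUint(strings.TrimPrefix(s, "0x"), 16, 64)
		if err != nil {
			http.Error(w, "bad block number", http.StatusBadRequest)
			return
		}
		resp := map[string]interface{}{"jsonrpc": "2.0", "id": req.ID}
		switch req.Method {
		case "zkevm_isBlockVirtualized":
			resp["result"] = n <= virtualizedUpTo
		case "zkevm_isBlockConsolidated":
			resp["result"] = n <= consolidatedUpTo
		default:
			resp["error"] = rpcError{Code: -32601, Message: "method not found"}
		}
		w.Header().Set("Content-Type", "application/json")
		_ = json.NewEncoder(w).Encode(resp)
	}))
}

func main() {
	node := newFakeNode(100, 90)
	defer node.Close()
	for _, b := range []uint64{80, 95, 120} {
		v, _ := isBlockIn(node.URL, "zkevm_isBlockVirtualized", b)
		c, _ := isBlockIn(node.URL, "zkevm_isBlockConsolidated", b)
		fmt.Printf("block %d: virtualized=%v consolidated=%v\n", b, v, c)
	}
}
```

Against a real node, replace `node.URL` with the node's RPC endpoint; given the timings above, a poll interval of a few seconds for virtualization and a minute or more for consolidation would be a reasonable starting point.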

invalid opcode: opcode 0x5f not defined

Problem

Deploying a contract compiled with a recent Solidity compiler (0.8.20 or later) to an older geth-based node (Quorum) fails with the following error:

Gas estimation errored with the following message (see below). The transaction execution will likely fail. Do you want to force sending?

Returned error: invalid opcode: opcode 0x5f not defined

OR the EVM version used by the selected environment is not compatible with the compiler EVM version.

Analysis

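The cause is that this EVM version does not implement EIP-3855, which introduces the PUSH0 instruction (opcode 0x5f).

In the Quorum source, the opcode table for the 0x50 range stops at JUMPDEST (0x5b) and has no PUSH0 (0x5f):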

https://github.com/Consensys/quorum/blob/643b5dcb8e2f0c68a8c78dc01c778a11f0ed14ba/core/vm/opcodes.go#L109-L121

const (
	POP      OpCode = 0x50
	MLOAD    OpCode = 0x51
	MSTORE   OpCode = 0x52
	MSTORE8  OpCode = 0x53
	SLOAD    OpCode = 0x54
	SSTORE   OpCode = 0x55
	JUMP     OpCode = 0x56
	JUMPI    OpCode = 0x57
	PC       OpCode = 0x58
	MSIZE    OpCode = 0x59
	GAS      OpCode = 0x5a
	JUMPDEST OpCode = 0x5b
	// 0x5c-0x5f are not defined here; in particular there is no PUSH0 (0x5f).
)

For comparison, go-ethereum added EIP-3855 support in https://github.com/ethereum/go-ethereum/pull/24039/files
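To check in advance whether compiled bytecode will hit this error, it can be scanned for PUSH0. This is a minimal Go sketch, assuming the input is the hex bytecode emitted by solc; `findPush0` and `decodeHex` are hypothetical helper names, not part of Quorum or go-ethereum.

```go
package main

import (
	"encoding/hex"
	"fmt"
	"strings"
)

const (
	opPUSH0  = 0x5f // EIP-3855
	opPUSH1  = 0x60
	opPUSH32 = 0x7f
)

// findPush0 returns the byte offsets of every PUSH0 instruction in code.
// It walks the bytecode instruction by instruction and skips the 1-32 bytes of
// immediate data after PUSH1..PUSH32, so a 0x5f byte inside push data is not reported.
func findPush0(code []byte) []int {
	var offsets []int
	for pc := 0; pc < len(code); pc++ {
		op := code[pc]
		switch {
		case op == opPUSH0:
			offsets = append(offsets, pc)
		case op >= opPUSH1 && op <= opPUSH32:
			pc += int(op-opPUSH1) + 1 // skip the immediate bytes
		}
	}
	return offsets
}

// decodeHex accepts bytecode with or without a leading "0x".
func decodeHex(s string) ([]byte, error) {
	return hex.DecodeString(strings.TrimPrefix(strings.TrimSpace(s), "0x"))
}

func main() {
	// Hypothetical fragment: PUSH1 0x80, PUSH1 0x40, MSTORE, PUSH0, PUSH1 0x5f
	code, err := decodeHex("0x60806040525f605f")
	if err != nil {
		panic(err)
	}
	fmt.Println(findPush0(code)) // [5]
}
```

Because the scan treats every remaining byte as an instruction, a 0x5f byte in the CBOR metadata that solc appends may also be reported, so a hit is a hint rather than proof. As a workaround until the EIP is merged, solc's `--evm-version` option can target an earlier EVM (for example `paris`), which does not emit PUSH0.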

Next steps

Merge the EIP-3855 change into Quorum and test it.

PrometheusAlert

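PrometheusAlert is an open-source message-forwarding system for operations alerting. It accepts alerts from mainstream monitoring systems (Prometheus, Zabbix), log systems (Graylog2, Graylog3), the data visualization system Grafana, SonarQube, Alibaba Cloud CloudMonitor, and any system that can send alerts through a WebHook, and forwards them to DingTalk, WeChat, email, Feishu, Tencent SMS, Tencent phone calls, Alibaba Cloud SMS, Alibaba Cloud phone calls, Huawei SMS, Baidu Cloud SMS, Ronglian Cloud phone calls, 7moor SMS, 7moor voice, Telegram, Baidu Hi (Ruliu), and more.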
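To illustrate what forwarding involves, here is a minimal Go sketch (not PrometheusAlert's own code) of the first half of the job: turning a Prometheus Alertmanager webhook payload into plain text. Only a subset of Alertmanager's payload fields is modeled, and `formatAlerts` is a hypothetical name.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// alertmanagerPayload holds the subset of Alertmanager's webhook JSON used here.
type alertmanagerPayload struct {
	Status string  `json:"status"`
	Alerts []alert `json:"alerts"`
}

type alert struct {
	Status      string            `json:"status"`
	Labels      map[string]string `json:"labels"`
	Annotations map[string]string `json:"annotations"`
}

// formatAlerts renders each alert as "[STATUS] alertname: summary", one per line,
// in the order the alerts appear in the payload.
func formatAlerts(raw []byte) (string, error) {
	var p alertmanagerPayload
	if err := json.Unmarshal(raw, &p); err != nil {
		return "", err
	}
	lines := make([]string, 0, len(p.Alerts))
	for _, a := range p.Alerts {
		lines = append(lines, fmt.Sprintf("[%s] %s: %s",
			strings.ToUpper(a.Status), a.Labels["alertname"], a.Annotations["summary"]))
	}
	return strings.Join(lines, "\n"), nil
}

func main() {
	raw := []byte(`{"status":"firing","alerts":[
	  {"status":"firing","labels":{"alertname":"HighCPU"},"annotations":{"summary":"CPU > 90% on node-1"}}]}`)
	text, err := formatAlerts(raw)
	if err != nil {
		panic(err)
	}
	fmt.Println(text) // [FIRING] HighCPU: CPU > 90% on node-1
}
```

The second half, posting the text to a channel such as a DingTalk or Feishu robot webhook, is left out because each channel defines its own request format.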